How do you recreate the experience of an in-person screening room with a remote, distributed team? That question is one of the reasons we built Dropbox Replay, our new video collaboration tool. Dropbox customers told us the shift to virtual work had turned their once-straightforward review sessions into lengthy, inefficient video calls. It was clear their in-person workflows hadn’t made an elegant transition online.

When we looked at the market for virtual screening tools, we mostly found expensive Hollywood-scale solutions or clunky DIY experiences run over video conferencing tools. Nothing quite offered the kind of collaborative, live, synchronous playback experience we envisioned: something that would approximate the feeling of being together in a screening room, with shared feedback, cursors, and playback controls. We saw an opportunity for an accessible yet high-quality online screening experience, where people could collaborate in real time as they would in person, everyone could see the same thing, and anyone could seamlessly annotate what they were seeing. We created Dropbox Replay’s Live Review feature in response.

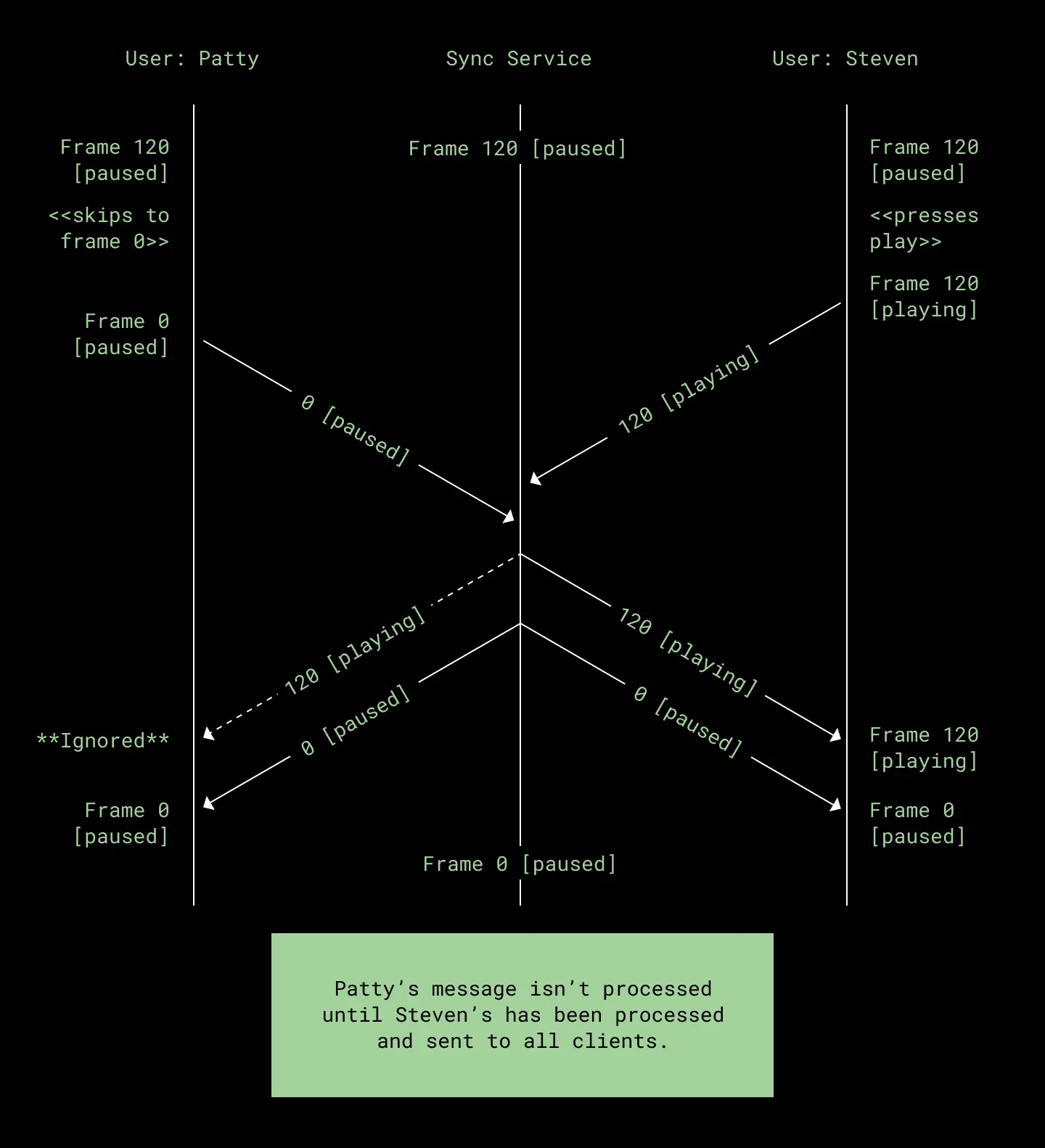

But keeping a virtual screening room with multiple collaborators in sync is a harder problem to solve than you might think. Anyone in a Live Review session can pause, adjust the playback speed, or scrub to a different frame at any time. This is great for keeping screenings open and collaborative, but it also means that everyone might be sending conflicting commands at once. What if two people try to change the position of the video at the same time?

For Live Review to work, we need to make sure that everyone converges on the same playback state in a timely manner. When a person is ready to discuss a frame, they must be able to trust that everyone is seeing the same frame too.

Changing states

In a Live Review session, we care about two types of state—single client state and shared client state. The position of someone’s mouse cursor or the drawings they make on a video frame are examples of single client state. These can’t conflict with other clients, so they’re relatively easy to handle: changes in single client state are sent to the server, which echoes them to the other clients, and that’s that.
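
As a rough sketch of how little machinery single client state needs, the Go snippet below relays cursor updates to every client except the sender. The `CursorUpdate` and `Relay` types are hypothetical, and buffered channels stand in for the WebSocket connections a real server would write to.

```go
package main

import (
	"fmt"
	"sync"
)

// CursorUpdate is a hypothetical single client state message: it describes
// only one participant, so it can never conflict with anyone else's.
type CursorUpdate struct {
	SessionID string
	X, Y      float64
}

// Relay is a hypothetical fan-out hub. Each buffered channel stands in for
// one client's WebSocket connection.
type Relay struct {
	mu      sync.Mutex
	clients map[string]chan CursorUpdate
}

func NewRelay() *Relay {
	return &Relay{clients: make(map[string]chan CursorUpdate)}
}

// Join registers a client and returns the channel it receives updates on.
func (r *Relay) Join(sessionID string) <-chan CursorUpdate {
	r.mu.Lock()
	defer r.mu.Unlock()
	ch := make(chan CursorUpdate, 16)
	r.clients[sessionID] = ch
	return ch
}

// Forward echoes an update to every client except the one that sent it.
// No ordering or conflict resolution is needed for this kind of state.
// (Simplification: a full channel would block while the lock is held.)
func (r *Relay) Forward(u CursorUpdate) {
	r.mu.Lock()
	defer r.mu.Unlock()
	for id, ch := range r.clients {
		if id != u.SessionID {
			ch <- u
		}
	}
}

func main() {
	relay := NewRelay()
	patty := relay.Join("patty")
	steven := relay.Join("steven")

	relay.Forward(CursorUpdate{SessionID: "patty", X: 0.25, Y: 0.5})

	fmt.Println(len(patty), len(steven)) // 0 1
	fmt.Println((<-steven).X)            // 0.25
}
```

Because each update describes only its sender, the relay never has to decide whose update wins; it just passes updates along.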

The shared client state, on the other hand, is what keeps local playback synchronized between all of the clients in a Live Review session. When someone joins a Live Review session, their client opens a WebSocket connection with a Dropbox Replay server (we use the open source Gorilla WebSocket library for Go, which is already used elsewhere within Dropbox). Any time someone presses play or pause, or changes the position of the video, the client sends a message conveying this change to the server, which updates the shared client state and then sends it to everyone else in the session.

The playback state is encoded using Protocol Buffers, which offer an extensible, schematized, and space-efficient way to package the data. We like the combination of Protocol Buffers and WebSockets; Protocol Buffers don’t concern themselves with message framing and leave the format on the wire up to the developer. WebSockets, meanwhile, are largely content-agnostic and offer text or binary messages that are framed, delivered reliably and in order, and even take care of ping/pong heartbeats, which can keep sessions alive through proxies or firewalls that might otherwise terminate an idle connection.

In a perfect world, only one person would interact with the video at a time. But that’s not how most screenings work. Everyone has something to say, or something they want the group to see—often at the same time! It’s precisely because anyone can influence playback at any time that our protocol must be resilient enough to handle multiple concurrent interactions and still produce a consistent outcome for all participants.

Establishing an order of events

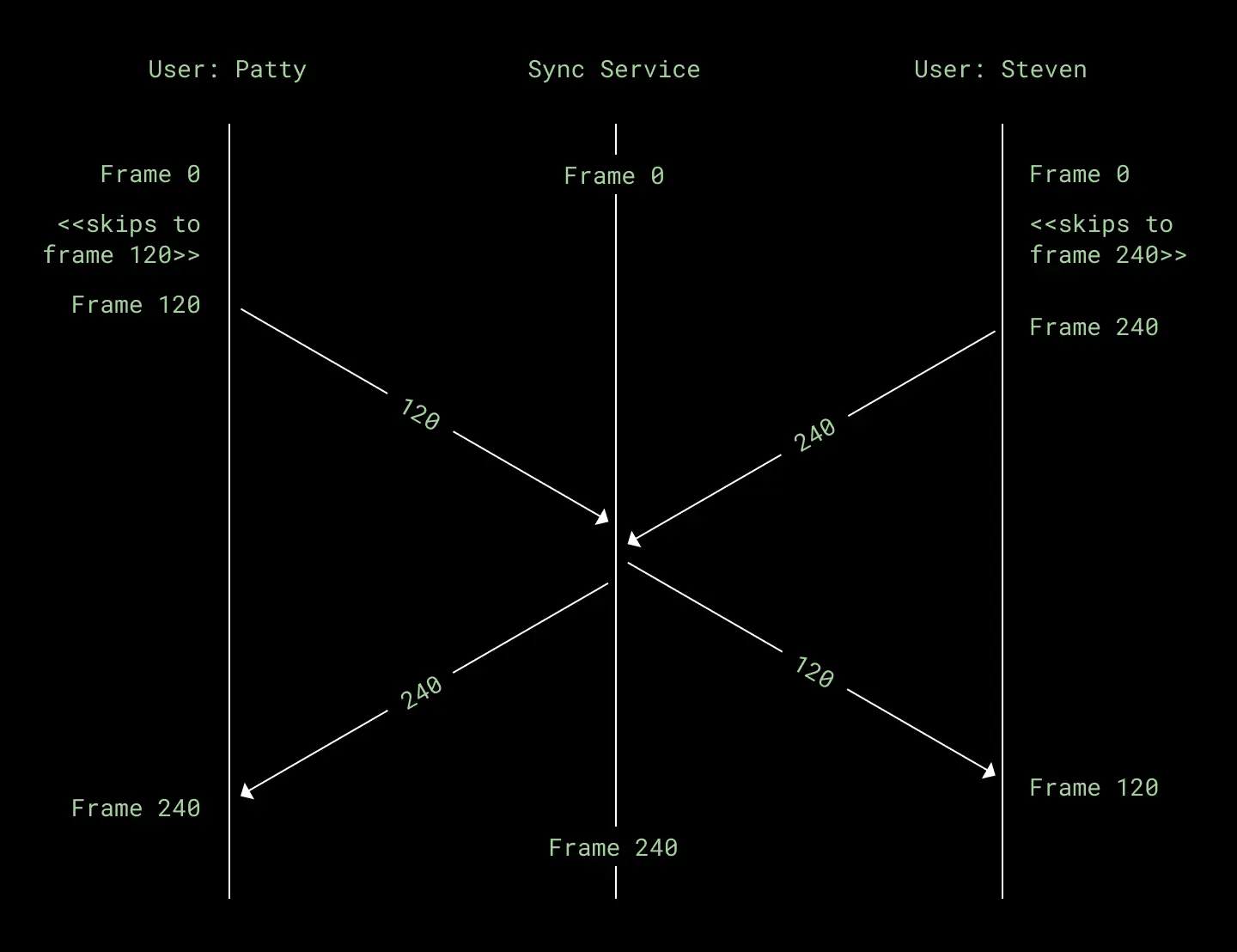

One approach might be to simply send all state changes (“video paused at frame” or “cursor is now at position”) to all other clients. However, when the state is shared between clients, this approach quickly leads to inconsistent states if more than one client changes the state at the same time: for example, if Patty makes a change and sends it to Steven, but Steven makes and sends a change before Patty’s change has arrived.

As you can see in the previous diagram, this approach could quite easily result in Patty and Steven adopting each other’s state and losing their own. The clients will end up out of sync. As far as Patty is concerned, she skipped to frame 120, and then Steven skipped her to frame 240. But as far as Steven is concerned, he skipped to frame 240 and then Patty skipped him to frame 120. How can we stay in sync when this happens?
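
The divergence is easy to reproduce. In this hypothetical model there is no server: each `Peer` applies its own change immediately, queues it for the other peer, and later adopts whatever arrives. The names are illustrative, not taken from Dropbox Replay.

```go
package main

import "fmt"

// Change is a hypothetical "jump to frame" message.
type Change struct {
	From  string
	Frame int64
}

// Peer applies its own changes at once and blindly adopts any change it
// receives from another peer.
type Peer struct {
	Name  string
	Frame int64
	inbox []Change // messages in flight to this peer
}

// Local applies a change on this peer and sends it to the others.
func (p *Peer) Local(frame int64, others ...*Peer) {
	p.Frame = frame
	for _, o := range others {
		o.inbox = append(o.inbox, Change{From: p.Name, Frame: frame})
	}
}

// Drain delivers queued messages, each overwriting the local state.
func (p *Peer) Drain() {
	for _, c := range p.inbox {
		p.Frame = c.Frame
	}
	p.inbox = nil
}

func main() {
	patty := &Peer{Name: "Patty"}
	steven := &Peer{Name: "Steven"}

	// Both act before either message arrives.
	patty.Local(120, steven)
	steven.Local(240, patty)
	patty.Drain()
	steven.Drain()

	fmt.Println(patty.Frame, steven.Frame) // 240 120: out of sync
}
```

If the two changes had not crossed in flight, the same naive scheme would converge; the failure only appears under concurrency.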

We could try to add timestamps to messages so that each client can determine if they received an old message, and therefore know the message can be ignored, but this would rely on every client’s clock being synchronized. Since we don’t really need to know when a particular event was sent, only the order in which events happened, what if we simply delegated this job to the server instead?

We found we could leverage our server-side sync service to establish a logical clock or, more precisely, a happened-before relationship among the messages it receives from each client in a Live Review session. On their own (i.e., without a server), clients would need very precise clocks or a more complicated negotiation to establish that same happened-before relationship. By simply sending messages to the server and letting the server establish the order of events, which it then broadcasts to all clients, we get a simpler approach that achieves the same outcome.

Good, but not great

WebSockets already guarantee in-order message delivery on each connection, so what’s left is to ensure that the server processes incoming messages from all connections in a single, consistent order. How did we achieve that in our server?

```go
type PlaybackState struct {
	frame int64
	rate  float32
}

var (
	mutex         sync.RWMutex // guards playbackState
	playbackState PlaybackState
)

...

// Each connection is assigned a unique sessionId.
// Messages from each connection are handled by a separate goroutine.
// Protobuf messages are decoded from binary WebSocket messages.
func handleMessage(msg *SyncMessage, sessionId string) {
	switch messageType := msg.GetMessageType().(type) {

	...

	case *Message_Playback:
		// Hypothetical accessors: the playback message schema isn't shown,
		// so these field names are assumptions.
		frame := messageType.Playback.GetFrame()
		rate := messageType.Playback.GetRate()

		mutex.Lock() // Lock the state, or wait if another session holds the lock

		// Update the state
		playbackState = PlaybackState{
			frame: frame,
			rate:  rate,
		}

		// Send the new state to all users, including the sender
		SendMessageToAllIncludingSender(sessionId, msg)

		mutex.Unlock() // Unlock only once we've sent the message to all clients

	...

	}
}
```

Our sync service guarantees that a response to each playback message is sent to all clients before it starts processing the next message, thereby establishing a canonical happened-before relationship. It also echoes each message back to the client that sent it, so that the sender learns where its own change falls in that timeline, too. Let’s look at why this matters:

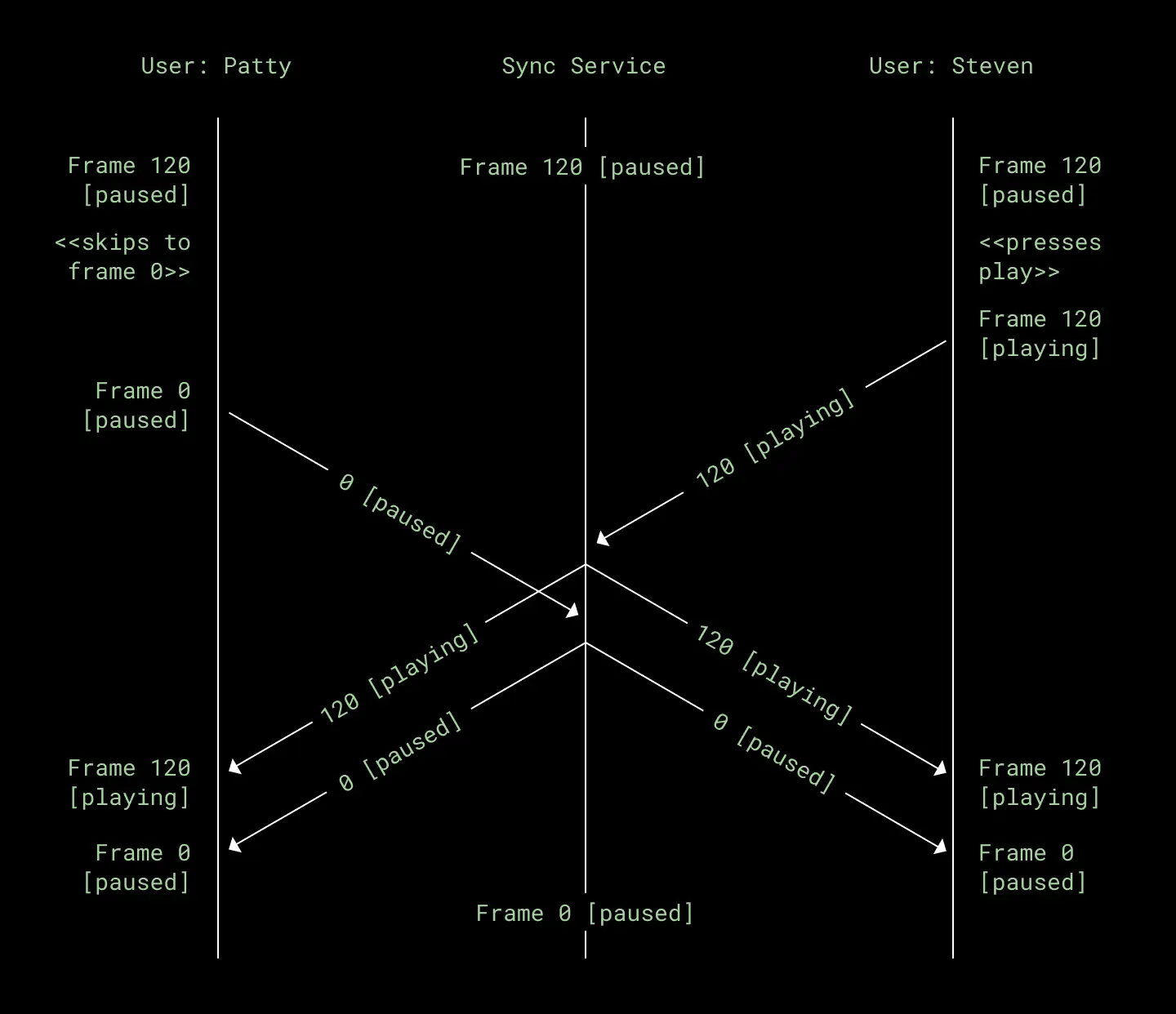

As you can see, by echoing their messages back to them, Patty and Steven now both end up at the same place. This is good! However, while we now have our clients converging on the same state, they still take different paths. This isn’t necessarily bad, but let’s look at the experience from both sides.

For Steven:

- He starts paused on frame 120

- He then presses play

- The video plays

- Then he sees that Patty paused the video on frame 0—and his video matches

For Patty:

- She starts paused on frame 120

- She skips her video to frame 0 and remains paused

- Because of Steven’s message, her video then starts playing from frame 120

- But then a short time later her video skips back to frame 0 and remains paused
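
The two experiences above can be reproduced with a small simulation. This is a hypothetical model: `EchoClient` applies its own change at once and then applies everything the server broadcasts, including its own echo. Frames do not advance while "playing", and the server is assumed to order Steven’s message first.

```go
package main

import "fmt"

// State is a hypothetical playback state: a frame and whether it is playing.
type State struct {
	Frame   int64
	Playing bool
}

// Msg is a playback change as broadcast by the server.
type Msg struct {
	From  string
	State State
}

// EchoClient applies its own changes immediately, then applies every
// message the server broadcasts, including echoes of its own changes.
type EchoClient struct {
	Name string
	Seen []State // every distinct state this viewer saw, in order
}

func (c *EchoClient) show(s State) {
	if n := len(c.Seen); n == 0 || c.Seen[n-1] != s {
		c.Seen = append(c.Seen, s)
	}
}

func (c *EchoClient) Local(s State) Msg {
	c.show(s)
	return Msg{From: c.Name, State: s}
}

func (c *EchoClient) Receive(m Msg) {
	c.show(m.State)
}

func main() {
	start := State{Frame: 120}
	steven := &EchoClient{Name: "steven"}
	patty := &EchoClient{Name: "patty"}
	steven.show(start)
	patty.show(start)

	// Both act before hearing from each other.
	play := steven.Local(State{Frame: 120, Playing: true})
	skip := patty.Local(State{Frame: 0})

	// The server orders Steven's message first and echoes both to everyone.
	for _, m := range []Msg{play, skip} {
		steven.Receive(m)
		patty.Receive(m)
	}
	fmt.Println(steven.Seen) // [{120 false} {120 true} {0 false}]
	fmt.Println(patty.Seen)  // [{120 false} {0 false} {120 true} {0 false}]
}
```

Both clients end on `{0 false}`, but Patty briefly sees Steven’s older state on the way there.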

A decent experience for Steven, but a surprising and clunky experience for Patty. We can do better!

A smooth experience for all

We mentioned before that our server-side sync service acts as a logical clock for these playback messages. It ensures that the order in which it receives messages is reflected in the order it sends them back out to each and every client, including the client that sent each message in the first place. Can we make use of this to improve the experience for Patty? It turns out we can.

Every state change message Patty receives between the moment she skips to frame 0 and the moment her own message comes back from the server tells us something very important: it must have happened before Patty changed the frame.

How do we know this? The sync service processes and responds to each message one at a time—and in order. This means that, by design, any message that Patty receives before her own message is echoed back must have happened before her initial message, and thus her client can safely ignore it.
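
Here is one way to sketch that rule in Go, under stated assumptions: each message carries a hypothetical `From` field so a client can recognize its own echo, and the client counts how many of its own changes are still awaiting an echo. While that count is above zero, any other message must have been ordered before the client’s change and is skipped.

```go
package main

import "fmt"

// State is a hypothetical playback state: a frame and whether it is playing.
type State struct {
	Frame   int64
	Playing bool
}

// Msg is a broadcast playback change. From is an assumed field that lets a
// client recognize the echo of its own change.
type Msg struct {
	From  string
	State State
}

// Client applies its own changes immediately and skips foreign messages
// while any of its own changes are still awaiting their echo.
type Client struct {
	Name    string
	pending int     // own messages sent but not yet echoed back
	Seen    []State // distinct states this viewer saw, in order
}

func (c *Client) show(s State) {
	if n := len(c.Seen); n == 0 || c.Seen[n-1] != s {
		c.Seen = append(c.Seen, s)
	}
}

// Local applies a change on screen right away and returns the message to send.
func (c *Client) Local(s State) Msg {
	c.show(s)
	c.pending++
	return Msg{From: c.Name, State: s}
}

// Receive handles one message from the server, in server order.
func (c *Client) Receive(m Msg) {
	switch {
	case m.From == c.Name:
		// Our own echo: the screen already shows this (or a later) change.
		c.pending--
	case c.pending > 0:
		// Ordered before our outstanding change by the server; ignore it.
	default:
		c.show(m.State)
	}
}

func main() {
	start := State{Frame: 120}
	steven := &Client{Name: "steven", Seen: []State{start}}
	patty := &Client{Name: "patty", Seen: []State{start}}

	play := steven.Local(State{Frame: 120, Playing: true})
	skip := patty.Local(State{Frame: 0})

	// The server orders Steven's message first and echoes both to everyone.
	for _, m := range []Msg{play, skip} {
		steven.Receive(m)
		patty.Receive(m)
	}
	fmt.Println(steven.Seen) // [{120 false} {120 true} {0 false}]
	fmt.Println(patty.Seen)  // [{120 false} {0 false}]
}
```

Counting outstanding changes, rather than tracking only one, keeps the rule correct when a client sends several changes before the first echo returns; that detail is an assumption of this sketch.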

What does this look like?

Now let’s look at the experience from both sides again:

For Steven:

- He starts paused on frame 120

- He then presses play

- The video plays

- Then he sees that Patty paused the video on frame 0—and his video matches

For Patty:

- She starts paused on frame 120

- She skips her video to frame 0 and remains paused

Finally, a smooth experience for both Steven and Patty.

Try it out for yourself

There were, of course, other approaches we could have used to keep everyone in sync. We could have built a system to record, forward and replay events across multiple clients, or used an existing synchronization algorithm, such as operational transformation, to achieve the same result. But while these approaches would technically have been able to keep all clients in sync, it was just as important that, whatever the solution, the Live Review experience feel good for our users, too. By prioritizing how the user would experience a Live Review session, we ended up with a simpler algorithm than if we’d focused on the engineering problem alone.

We think the resulting experience is smooth and seamless, exactly what you want while receiving feedback on your latest creation. But don’t take our word for it. Dropbox Replay is now available in beta for you to try for free! Be sure to start your own Live Review session and tell us what you think.

Also: We’re hiring!

Do you love to build new things? Are you a curious engineer with a passion to see where a new idea takes you? Dropbox is hiring!

The Replay team is a small, nimble and creative force. We are focused on pushing boundaries and obsessed with building products that make our users’ lives easier and more productive. We're always on the lookout for clever, curious engineers who want to learn new things, take on bigger challenges, and build products our customers love. If you're an engineer with talent, passion, and enthusiasm, we'd love to have you at Dropbox. Visit our careers page to apply.

Special thanks to the Dropbox Replay team.