Two years ago, we shared the Engineering Career Framework we use at Dropbox. The framework is intended to help our engineers have greater impact in their roles and on their teams. For each role, we outline Core Responsibilities, or CRs—key behaviors that define what impactful work looks like and how to deliver it. We hoped that by providing consistent expectations for each level, we could better help Dropboxers grow in their engineering careers. The framework is now widely used as a reference during interviewing and hiring, performance reviews and calibrations, and the rating and promotion process.

We recently refreshed this framework to address some specific needs and reduce ambiguity. Along with sharing the updates, we wanted to go into a little more detail about how we manage this framework and what the update process is like. We used these updates for the 2022 review and calibration cycle, and feedback indicates that the changes did improve the clarity and accuracy of the framework descriptions—though some issues remain. Our work here is not finished.

We have updated the originally published framework in place, but to summarize:

- We added both technical craft expectations for engineering managers, and business acumen expectations for managers and many higher-level roles.

- We clarified and expanded on expectations for decision-making, collaboration, and contributions to organizational health.

- We strengthened and clarified the expectations for ownership over code, processes, and operational systems.

We hope that sharing some of our thought process here may prove useful to others undertaking a similar project. We’ll talk about why we decided to make the update and which changes we focused on in this round. Then we’ll explain how we deployed the framework operationally, and our new technical approach for making updates, now and in the future. Finally, we’ll look in more detail at the feedback we received and the path ahead.

Why update the framework?

In late 2021, Dropbox staff engineers gathered for our annual summit where we assessed the state of engineering at Dropbox. Our goal was to identify concrete things we could improve. Two relevant initiatives came out of that effort:

- Update the CRs to promote engineering efficiency

- Provide clearer paths and CRs to help senior engineers grow

These initiatives were based on the perception that reviews and promotions were too heavily biased towards big, complex, or high-profile achievements. Taking ownership, making tough decisions, or doing so-called keeping-the-lights-on (KTLO) work is no less important, but was often deemed less impactful. We heard this resulted in a tendency to build the wrong things or build them in the wrong way.

We also received repeated feedback that the CRs were not as helpful for senior engineers looking to understand their performance relative to expectations. This was especially the case for more experienced engineers looking for paths to promotion.

Several high-level engineers and engineering managers (EMs) formed a working group to update our framework accordingly. This was done in coordination with a broader Dropbox-wide initiative to refresh our CRs to take into account Virtual First, as well as the critical role that managers play in building and sustaining successful cultures.

Because the CRs explicitly state the expectations of engineers at all levels, they can be a powerful lever for effecting cultural change. We had the explicit goal of better rewarding the kinds of engineering behaviors that we wanted to see, and more clearly defining the different roles or archetypes more senior engineers can function in and how those map to the CRs. Specifically, we wanted promotion evaluations to favor a variety of possible contributions, rather than over-indexing on the building of large, complex things.

What changes did we make?

We started with a survey of engineers on how well the CRs mapped to the work they were doing and how they were evaluated. Of 106 respondents, more than a quarter reported they didn’t feel the CRs reflected the work they were doing on a daily basis. More than a fifth reported that they didn’t clearly understand what was expected to get to the next level. While the career framework explicitly states that it is not intended to be a checklist for promotion, the fact remains that because it is the canonical source of level expectations, some people will use it in this way.

Much of the feedback we received centered on how the framework didn’t effectively capture how different CRs were weighted at review time, and how chores like on-call toil, KTLO, glue work, and documentation were under-valued. We also heard a desire for increased clarity around how more senior engineers (IC4+) could fulfill various roles or archetypes. For instance, how might a specific IC4 who is functioning more as a tech lead be evaluated fairly in comparison with an IC4 who is functioning more as an architect?

To address this feedback, we made numerous minor updates to the CRs and descriptions, particularly at higher levels, in order to encourage the behaviors we want engineers to exemplify. For example:

- Before, IC3 software engineers didn’t have clear expectations of what ownership looked like at that level, so we added the following craft CR: “I look for ways to reduce future toil and tech debt for existing components my team owns.”

- To ensure security-by-design, we often ask IC4 security engineers to collaborate with other teams during the early stages of an effort—but this wasn’t expressed in the CRs and wasn’t being assessed consistently in calibrations. We added the following culture CR: “I am effective at working with cross-functional stakeholders to identify technical blindspots and clarify ambiguity in their ideas.”

- IC3+ software engineers didn’t have guidance about expectations for their role within the larger organization beyond their team, so we added a business acumen CR, tailored per level. This is an example of what it looks like for an IC4: “I have a working knowledge of Dropbox’s org/team structure and how teams work together across Dropbox, and am able to help my team effectively collaborate effectively with other teams across our org.”

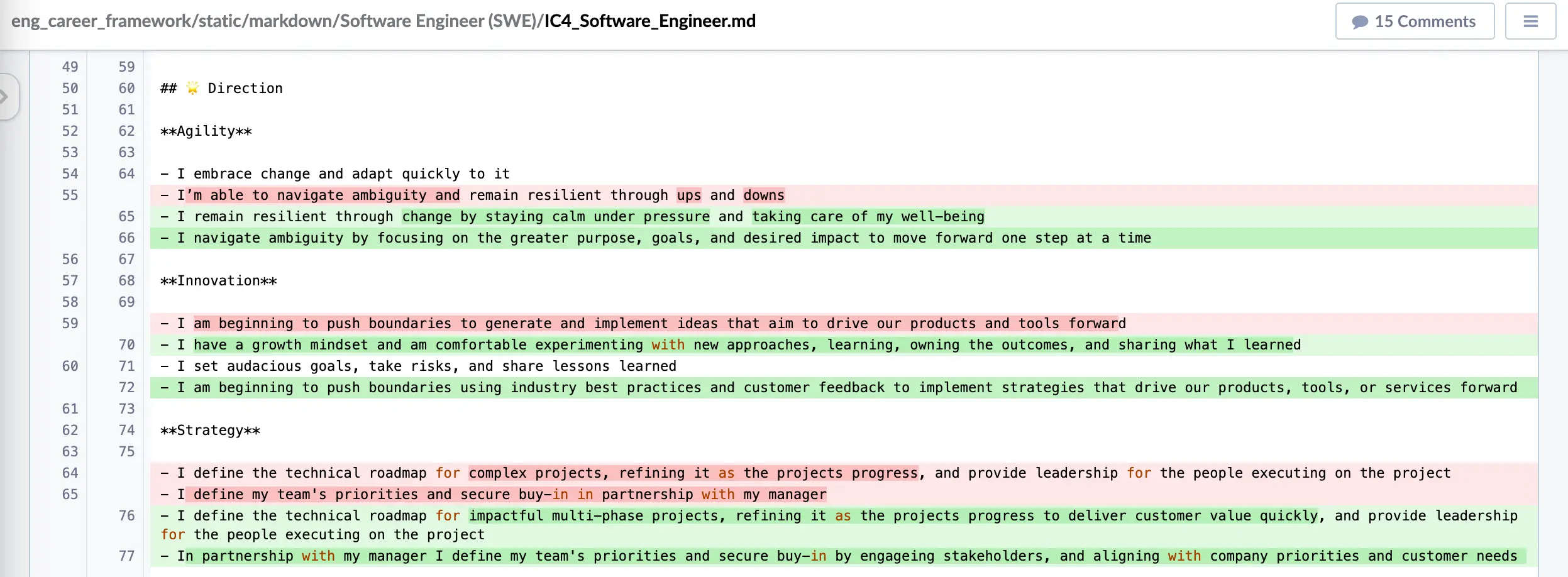

A sample of some of the updates to the IC4 Software Engineer CRs that shows the level of thought and work that goes into modifying each line

We also added two special sections to the appendix of the framework. One explains and highlights different Engineering Archetype behaviors—heavily derived from Will Larson’s definitions—and how they map to levels and CRs. The other provides context and clarification around the CRs more generally and dispels myths about how they are to be used. The goal of these sections is to add archetypes and related behaviors to the language of growth and evaluation here at Dropbox, and to be a single source for greater clarity on how evaluations actually work.

Operational approach

We wanted to deploy the updated framework quickly so that we could see the benefit from the changes. However, we saw early on that suddenly changing the evaluation criteria for anyone would feel like moving the goalposts mid-cycle, and therefore not align with one of our company values, Make Work Human. So our working group coordinated with the People team and established a plan for previewing changes early, before the mid-year lightweight review cycle, in order to get feedback and encourage engineering ICs and EMs to start aligning with the new descriptions.

After the mid-year cycle, we incorporated the feedback, then heavily promoted and circulated the updates—via Slack announcements, emails, engineering all-hands meetings, etc. This was to ensure everyone was aware of the changes and had plenty of lead time to incorporate them into their models of how they and others would be evaluated. We wanted to make sure that nobody felt like we were moving the goalposts on them, even while we worked out the kinks and clarified things in response to feedback.

Here’s our plan, right out of our working group coordination doc:

- Establish working group and work streams

- Collect feedback via survey

- Update and iterate on CRs

- Share with VPs and HR to get early feedback and update

- Collect feedback from engineering leadership team and update

- Share internally with all of engineering

- Incorporate any feedback and publish final updates

- Publish externally

Technical approach

With these changes in mind—and looking ahead to future iterations of this framework—we also went looking for a better way to manage updates to the underlying documents. We wanted a system that would, among other things, let us:

- facilitate the review and approval process

- preserve a history of changes over time and allow comparison between versions and related roles

- easily (re)generate content based on controlled source files

- have multiple, simultaneous, independent updates exist in various stages of the process

Naturally, if you put a bunch of engineers and EMs in a room and give them requirements like this, their solutions will inevitably involve bespoke programming and source control—and we are no exception. Early proposals got fairly sophisticated; at one point, we considered modeling each role as a class hierarchy and then generating comparisons programmatically. But in the end, we took our own advice—impactful work doesn’t always have to be big or complex—and settled for something simple.

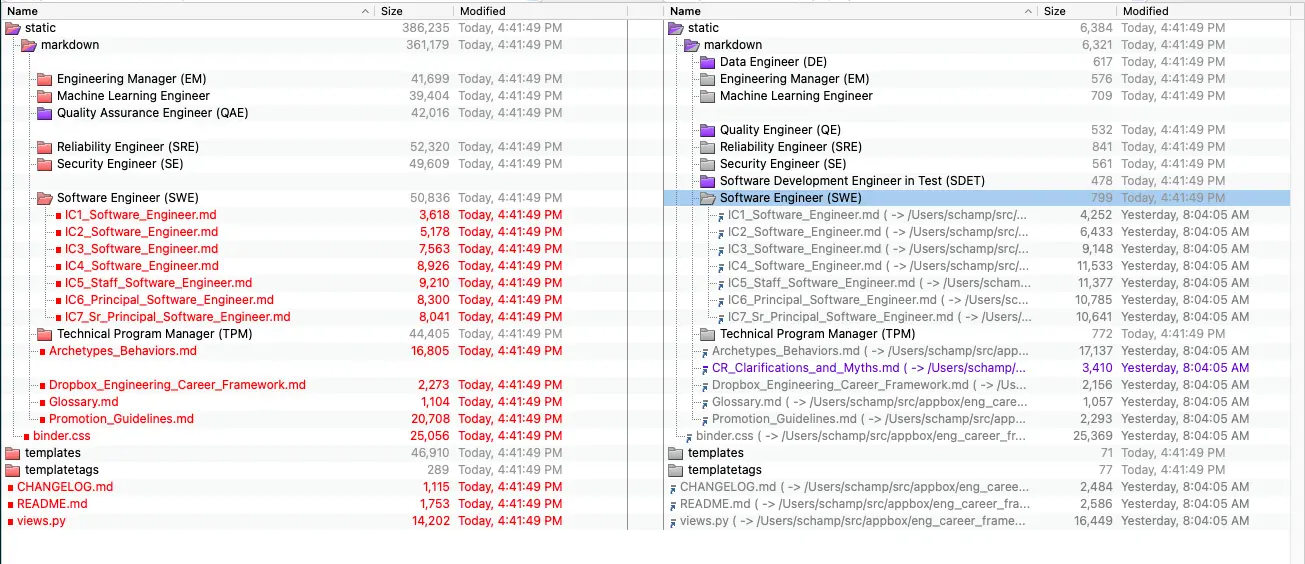

We set up an internal git repository to contain the text content of the framework, and a bare-bones Django site for simple internal hosting. The underlying documents are stored in a folder hierarchy of markdown files, organized by role. Each file is a complete, self-contained description of the CRs for a single role and level (for example, “IC5 Staff Security Engineer”).

Using a comparison tool to assess changes to the framework in the process of writing this article

Storing the CRs as markdown files in a git repository comes with some big advantages. They are easily rendered, but the source is also human readable and diff-able. No external database, CRM, or other system is necessary to maintain the contents outside of the source tree; updates can be controlled according to normal source code review processes. And when someone decides we need to change how we present the framework, the markdown files are easily portable to any number of static site generation systems.
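To illustrate why plain markdown files are convenient to compare, here is a minimal sketch using Python's standard `difflib` module to diff two level descriptions. The file names and CR text are hypothetical placeholders, not the contents of our repository, and this is not the tooling we use internally.

```python
import difflib


def compare_levels(old_text: str, new_text: str, old_name: str, new_name: str) -> list[str]:
    """Return a unified diff between two markdown role descriptions."""
    return list(
        difflib.unified_diff(
            old_text.splitlines(),
            new_text.splitlines(),
            fromfile=old_name,
            tofile=new_name,
            lineterm="",  # lines were split without newlines, so add none back
        )
    )


# Hypothetical file contents, for illustration only.
ic3 = (
    "# IC3 Software Engineer\n"
    "\n"
    "- I own components my team maintains.\n"
    "- I write tests for my code.\n"
)
ic4 = (
    "# IC4 Software Engineer\n"
    "\n"
    "- I own components my team maintains.\n"
    "- I design systems that span multiple teams.\n"
)

diff_lines = compare_levels(ic3, ic4, "software-engineer/ic3.md", "software-engineer/ic4.md")
print("\n".join(diff_lines))
```

The same comparison works between two versions of one file or between neighboring levels of one role, because each file is a self-contained description.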

Here’s our guidance for any Dropboxer who wants to update the framework, directly from the README.md file:

The Engineering Career Framework is a living document that will evolve over time, and anyone within engineering at Dropbox should feel empowered to suggest changes.

For lightweight changes such as syntax or formatting changes, a simple diff should be reviewable by the members of the Engineering Career Framework working group and result in changes being visible by the next deployment [of the internal tool].

Larger-scale changes such as significant rewording, adding/removing copy, or level/role changes will involve multiple levels of approvals. While these cannot be merged through a single diff approval, the diffs themselves can be used as the first step in surfacing where the engineering community at large feels there is a gap between the CRs and their day-to-day responsibilities.

With this system in place, each individual update to the CRs can be made with the following process:

- Create a diff with the desired changes and circulate to stakeholders.

- Review the changes with stakeholders and respond to feedback.

- Update the changelog with a description of the changes, then merge the diff.

- Deploy the updated source to the internal mini-site.

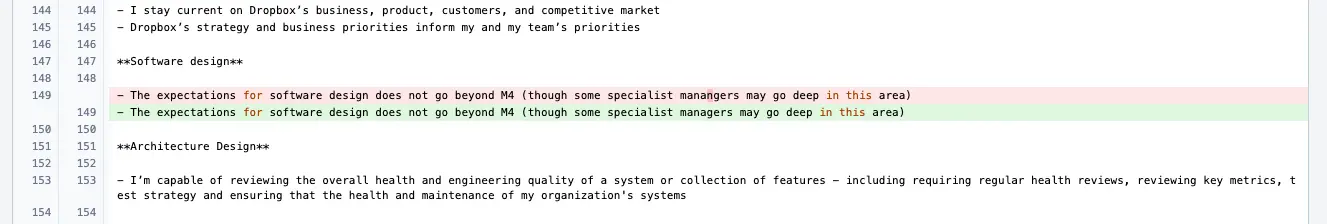

Example of a diff correcting a typographical error

We implemented the changes described above as we worked the kinks out of the process. We found that minor, non-material changes (like correcting typographical errors, removing duplication, etc.) could easily be applied with a relatively light review, while more substantial updates could be reviewed with increased rigor, as appropriate.

When it came time to publish, we were able to export a static version of the framework and update our externally-facing repo and site. The externally published version is for transparency and the benefit of the community at large. Naturally, we don’t take pull requests on it.

Results and path forward

After launching this framework internally and using it for a full-year review cycle, our working group again ran a survey asking engineers who used the framework about the changes. We didn’t have as many respondents as in the first survey, but nine out of ten agreed that the updated framework better reflects the work they are doing at their level, which is confirmation that this round of updates is a step in the right direction.

We also received a lot of valuable comments. Some suggested the new archetypes are valuable, but could use more clarity around how they tend to be expressed in lower levels. Others felt the CRs are still too wordy and vague (most of this update cycle was spent adding clarification, not trimming and streamlining). And engineers still wanted a clearer explanation of the differences between levels (while the files are structured for easy comparison using a comparison tool, it would be better to make this a first-class capability of the presentation system).

After going through this whole process, we found some downsides to using markdown, too. The markdown table syntax, while easily human-readable, is not at all conducive to tracking changes across large tables full of text—as we discovered the hard way. Reformatting the pages to avoid the use of tables mitigated this, but we had plenty of feedback that removing the tables made the text harder to ingest and compare.
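The table problem can be reproduced with a small sketch. A markdown table row packs the cells for every level onto one line, so a line-based diff flags the whole row even when only one cell changed. The CR wording and table layout below are hypothetical, and `changed_lines` is a helper written for this illustration.

```python
import difflib


def changed_lines(old: str, new: str) -> list[str]:
    """Return only the removed ("- ") and added ("+ ") lines of a line-based diff."""
    return [
        line
        for line in difflib.ndiff(old.splitlines(), new.splitlines())
        if line.startswith(("- ", "+ "))
    ]


IC3_CR = "I reduce toil for components my team owns."
IC4_OLD = "I reduce toil across my team's systems."
IC4_NEW = "I reduce toil and tech debt across my team's systems."

# Table layout: both levels share one physical line per row.
table = "| Area | IC3 | IC4 |\n| --- | --- | --- |\n| Craft | {} | {} |\n"
table_changes = changed_lines(table.format(IC3_CR, IC4_OLD), table.format(IC3_CR, IC4_NEW))

# List layout: each CR sits on its own line.
listing = "### IC3\n\n- {}\n\n### IC4\n\n- {}\n"
list_changes = changed_lines(listing.format(IC3_CR, IC4_OLD), listing.format(IC3_CR, IC4_NEW))

# The untouched IC3 text shows up in the table diff but not in the list diff.
print(any(IC3_CR in line for line in table_changes))
print(any(IC3_CR in line for line in list_changes))
```

With wide tables and long cells, the reviewer has to hunt for the real change inside a long row, which is the difficulty described above.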

We also discovered the joys of maintaining large blocks of text in source control, and trying to decide whether to keep things in single lines, or apply line breaks. Late in the game we discovered SemBr, an approach for breaking up text with line breaks on semantic boundaries for easier comparison. We are looking to adopt this moving forward as the framework continues to evolve.
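As a rough sketch of the idea, the function below puts each sentence on its own line. It is a simplification: the SemBr convention also suggests breaks at clause boundaries, and this naive rule will split incorrectly after abbreviations such as "e.g.". The function name and sample text are our own illustration.

```python
import re


def semantic_breaks(paragraph: str) -> str:
    """Put each sentence on its own line (a simplified take on semantic line breaks)."""
    # Collapse existing whitespace so the result does not depend on prior wrapping.
    text = " ".join(paragraph.split())
    # Replace the space that follows sentence-ending punctuation with a newline.
    return re.sub(r"(?<=[.!?]) ", "\n", text)


sample = (
    "I look for ways to reduce toil.   I document what I learn.\n"
    "I share it with my team."
)
print(semantic_breaks(sample))
```

Because markdown renderers treat a single newline inside a paragraph as a soft break, the rendered output reads the same, while a later edit to one sentence touches only one line in the diff.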

Finally, we found that because so many of the CRs across roles have similar language, updating wording can be cumbersome, both for implementation and review. Storing each individual role as separate text allows for easy tailoring, but makes maintaining consistency more costly. We’re still exploring ways to do both, but we haven’t landed on a solution for this yet.
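One way to at least surface the duplication, sketched here under assumptions, is to index bullet lines by the roles that contain them. This is not a solution we have adopted; the file names and CR text are hypothetical, and it only catches lines that match exactly.

```python
from collections import defaultdict


def shared_lines(roles: dict[str, str]) -> dict[str, list[str]]:
    """Map each bullet line that appears in more than one role to those roles."""
    seen: dict[str, list[str]] = defaultdict(list)
    for role, text in roles.items():
        # Use a set so a line repeated within one file is counted once.
        bullets = {line.strip() for line in text.splitlines() if line.strip().startswith("- ")}
        for bullet in bullets:
            seen[bullet].append(role)
    return {bullet: sorted(names) for bullet, names in seen.items() if len(names) > 1}


# Hypothetical role files, for illustration only.
roles = {
    "ic3-software-engineer.md": "- I write tests.\n- I reduce toil.\n",
    "ic3-security-engineer.md": "- I reduce toil.\n- I review threat models.\n",
}
print(shared_lines(roles))
```

A report like this shows which files a wording change would need to touch, though it does nothing to reduce the review effort itself.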

Conclusion

Besides executing an update to the CRs, one of the major goals of this working group was to put in place a system to make future updates easier to execute, so that the CRs could continue to be adapted more quickly in response to the needs of the engineering organization. We think we’ve achieved that goal. Since the adoption of the framework, we’ve added two new roles to the CRs, Software Development Engineer in Test (SDET) and Data Engineer, and have been able to make a number of minor corrections in response to feedback from the review cycle.

The twice-yearly review cycle and the need for a slow and internally publicized rollout for major updates have not changed. But we now have a system for proposing and reviewing changes (individually or in a batch), which can reduce the time it takes to make updates and allow more time for publishing and reorienting teams before the next review.

If you haven't already, have a look at our updated Engineering Career Framework for yourself.

If building innovative products, experiences, and infrastructure excites you, come build the future with us! Visit dropbox.com/jobs to see our open roles, and follow @LifeInsideDropbox on Instagram and Facebook to see what it's like to create a more enlightened way of working.

Dropbox Dash: Find anything. Protect everything.
