Background: HTTP/2 and Dropbox web service infrastructure

HTTP/2 (RFC 7540) is the new major version of the HTTP protocol. It is based on SPDY and provides several performance optimizations compared to HTTP/1.1. These optimizations include more efficient header compression, server push, stream multiplexing over the same connection, etc. As of today, HTTP/2 is supported by major browsers.

Dropbox uses the open-source Nginx to terminate SSL connections and perform layer-7 load balancing for web traffic. Before the upgrade, our front-end servers ran Nginx 1.7-based software and supported SPDY. Beyond the performance benefits, another motivation for the upgrade is that Chrome currently supports both SPDY and HTTP/2 but will drop SPDY support on May 15th. If we did not support HTTP/2 by then, our Chrome clients would fall back from SPDY to HTTP/1.1.

The HTTP/2 upgrade process

The HTTP/2 upgrade was a straightforward and smooth transition for us. Nginx 1.9.5 added the HTTP/2 module (co-sponsored by Dropbox) and dropped SPDY support by default. In our case, we decided to upgrade to Nginx 1.9.15, which was then the latest stable version.

The Nginx upgrade involves making simple changes in configuration files. To enable HTTP/2, the http2 modifier needs to be added to the listen directive. In our case, because SPDY was previously enabled, we simply replaced spdy with http2.

Before (SPDY): listen A.B.C.D:443 ssl spdy;

After (HTTP/2): listen A.B.C.D:443 ssl http2;

Of course, you will probably want to go through the complete set of Nginx HTTP/2 configuration options to optimize for your specific use cases.
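For a fleet with many configuration files, the spdy-to-http2 swap can be scripted. The following is a minimal sketch, not the tooling we used: the function name `spdy_to_http2` is hypothetical, and it assumes each `listen` directive sits on a single line.

```python
import re

# Matches a complete single-line `listen ...;` directive:
# group 1 = indentation plus "listen", group 2 = parameters, group 3 = ";" and the rest.
LISTEN_RE = re.compile(r"^(\s*listen\s+)([^;]*)(;.*)$")


def spdy_to_http2(config_text: str) -> str:
    """Replace the `spdy` parameter with `http2`, in listen directives only."""
    out = []
    for line in config_text.splitlines():
        match = LISTEN_RE.match(line)
        if match:
            # Swap only the standalone `spdy` token; leave address, port, ssl, etc. alone.
            params = ["http2" if p == "spdy" else p for p in match.group(2).split()]
            line = match.group(1) + " ".join(params) + match.group(3)
        out.append(line)
    result = "\n".join(out)
    # Preserve a trailing newline if the input had one.
    return result + "\n" if config_text.endswith("\n") else result


print(spdy_to_http2("listen A.B.C.D:443 ssl spdy;"))
```

Lines that are not `listen` directives pass through unchanged, so other SPDY-related directives would still need to be reviewed by hand.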

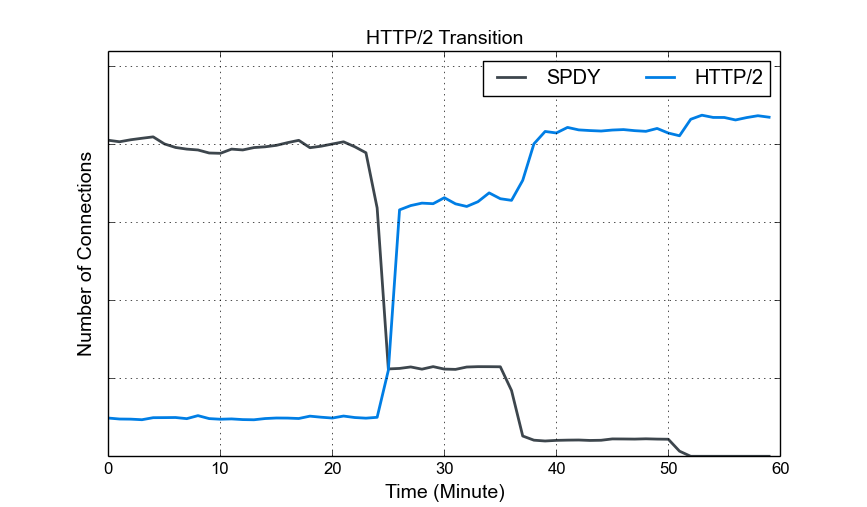

As for deployments, we first enabled HTTP/2 on canary machines for about a week while we were still using SPDY in production. After verifying the correctness and evaluating the performance, HTTP/2 was enabled across the fleet for our web services.
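One simple correctness check during a canary phase is to confirm which protocol a server actually negotiates. Below is a minimal sketch using Python's standard `ssl` module. It assumes the server negotiates HTTP/2 through TLS ALPN, and the function names are illustrative rather than part of our tooling.

```python
import socket
import ssl


def make_h2_context() -> ssl.SSLContext:
    """Build a TLS client context that offers HTTP/2 first, then HTTP/1.1."""
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0):
    """Return the ALPN protocol chosen by the server, or None if none was chosen."""
    ctx = make_h2_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("canary.example.com")` (a placeholder hostname) should return `"h2"` for a server with HTTP/2 enabled and `"http/1.1"` or `None` otherwise.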

The figure above shows the smooth transition from SPDY to HTTP/2. The remaining HTTP/1.1 connections are not shown in this figure. We gradually enabled HTTP/2 across all front-end web servers at around minutes 23, 36, and 50. Before that, the connections included both HTTP/2 traffic on the canary machines and SPDY traffic on the production machines. As you can see, nearly all the SPDY clients eventually migrated to HTTP/2.

Observations

Performance improvement

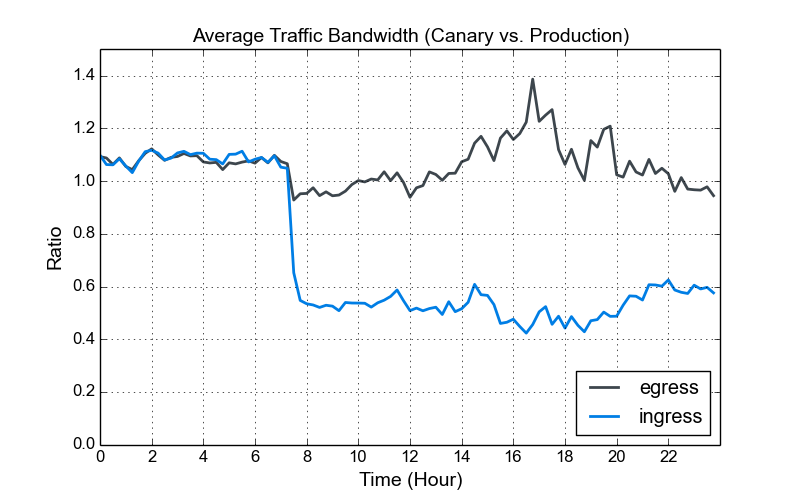

We have seen a significant reduction in the ingress traffic bandwidth, which is due to more efficient header compression (HPACK).
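To give a feel for why HPACK shrinks request headers, here is a minimal sketch of its simplest case: a header that matches an entry in the static table of RFC 7541 is sent as a single byte. Only a few static table entries are included, and the helper name `encode_indexed` is illustrative. A real encoder also maintains a dynamic table, which indexes headers repeated across requests on the same connection in a similar way.

```python
# A few entries from the HPACK static table (RFC 7541, Appendix A).
STATIC_TABLE = {
    (":method", "GET"): 2,
    (":method", "POST"): 3,
    (":path", "/"): 4,
    (":scheme", "https"): 7,
}


def encode_indexed(name: str, value: str) -> bytes:
    """Encode a header as an HPACK Indexed Header Field (one byte here)."""
    index = STATIC_TABLE[(name, value)]
    # Indexed Header Field: high bit set, followed by a 7-bit index.
    # Static table indices (1..61) always fit in that single byte.
    return bytes([0x80 | index])


headers = [(":method", "GET"), (":path", "/"), (":scheme", "https")]
encoded = b"".join(encode_indexed(n, v) for n, v in headers)
print(encoded.hex())  # 828487
```

Three header fields are carried in three bytes here, whereas a text-based protocol sends each name and value in full on every request.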

The figure above shows the ratio of average (per machine) traffic bandwidth between the canary and production machines, where HTTP/2 was enabled only on canary machines. Every canary or production machine received approximately the same amount of traffic from load balancers. As can be seen, the ingress traffic bandwidth was reduced significantly (by close to 50%) after we enabled HTTP/2. It is worth noting that although we had previously enabled SPDY on all canary and production machines, we did not turn on SPDY header compression because of the related security issues (CVE-2012-4929, aka CRIME). As for egress traffic, there was no significant change because headers typically account for only a small fraction of the response traffic.
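The ratio plotted in the bandwidth figure can be computed as sketched below. The sample values are made up for illustration and are not our measurements, and `bandwidth_ratio` is a hypothetical helper name.

```python
from statistics import mean


def bandwidth_ratio(canary, production):
    """Ratio of average per-machine bandwidth: canary group over production group."""
    return mean(canary) / mean(production)


# Hypothetical per-machine ingress bandwidth samples, in arbitrary units.
canary_ingress = [50, 52, 48]
production_ingress = [100, 98, 102]

ratio = bandwidth_ratio(canary_ingress, production_ingress)
print(ratio)  # 0.5
```

Comparing per-machine averages is only meaningful because each machine receives approximately the same amount of traffic from the load balancers.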

A couple of caveats

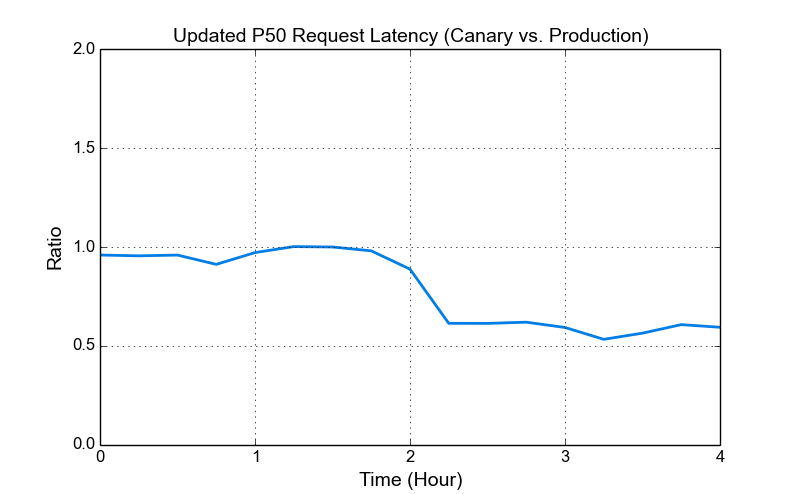

Update: the issues with the increased POST request latency and refused stream errors were resolved in the recent Nginx 1.11.0 release. The figure of the updated P50 request latency after applying this change is at the end of this post.

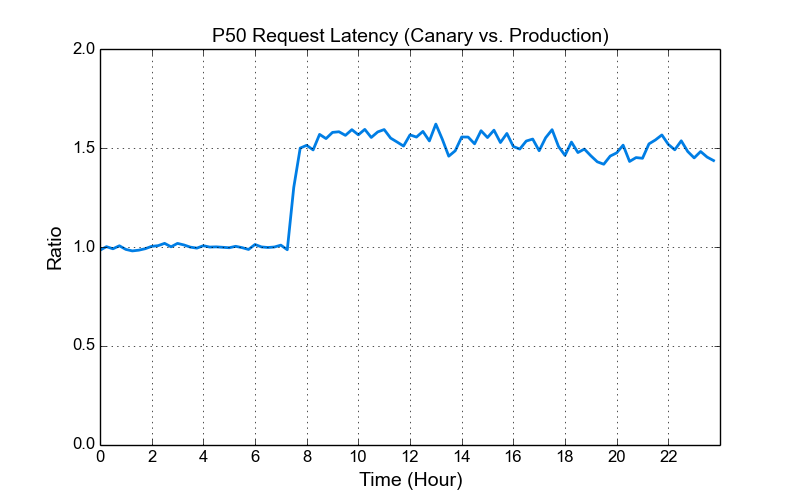

Increased latency for POST requests. When we enabled HTTP/2 on the canary machines, we noticed an increase in median latency. The figure below shows the ratio of P50 request latencies between canary and production machines. We investigated this issue and found that the increased latency was introduced by POST requests. After further study, this behavior appeared to be due to the specific implementation in Nginx 1.9.15. Related discussions can be found in the Nginx mailing list thread.

Note that the increased P50 request latency ratio we see here (approximately 1.5x) depends on the specific traffic workload. In most cases, the overhead was about one additional round trip time for us, and it did not impact our key performance much. However, if your workload consists of many small and latency-sensitive POST requests, then the increased latency is an important factor to consider when upgrading to Nginx 1.9.15.
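The dependence on workload follows from simple arithmetic: a fixed extra round trip is a larger share of a short request than of a long one. The sketch below uses hypothetical latencies and a hypothetical helper, `latency_ratio`, purely to illustrate the effect.

```python
def latency_ratio(base_latency_ms: float, rtt_ms: float, extra_round_trips: int = 1) -> float:
    """Ratio of request latency with extra round trips to the baseline latency."""
    return (base_latency_ms + extra_round_trips * rtt_ms) / base_latency_ms


# Hypothetical numbers: a 50 ms round trip added to a short and a long request.
print(latency_ratio(100, 50))   # 1.5
print(latency_ratio(1000, 50))  # 1.05
```

With these assumed numbers, a 100 ms request becomes 1.5x slower while a 1000 ms request is barely affected, which is why small, latency-sensitive POST requests are the case to watch.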

Be careful with enabling HTTP/2 for everything, especially when you do not control the clients. HTTP/2 is still relatively new, and in our experience some clients, libraries, and server implementations are not yet fully compatible. For example:

- With Nginx 1.9.15, clients could get refused stream errors for POST requests if they attempted to send DATA frames before acknowledging the connection SETTINGS frame. We have seen this issue with the Swift SDK. It is worth noting that monitoring Nginx error logs is crucial during deployments, and this specific error message only appears when the error log level is set to info.

- Chrome did not handle RST_STREAM with NO_ERROR properly and caused issues (Chromium Issue #603182) with Nginx 1.9.14. A workaround has been included in Nginx 1.9.15.

- Nghttp2 did not send END_STREAM when there was no window space; this was also discussed in the aforementioned Nginx mailing list thread.
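The frames named in these issues (SETTINGS, DATA, RST_STREAM) and the END_STREAM flag all share the 9-byte frame header defined in RFC 7540. When inspecting captured traffic, a small parser like the sketch below can help identify them. The function name and the returned dictionary layout are illustrative choices, not part of any library.

```python
import struct

# Frame type codes and flags from RFC 7540 (subset relevant here).
FRAME_TYPES = {0x0: "DATA", 0x1: "HEADERS", 0x3: "RST_STREAM", 0x4: "SETTINGS"}
FLAG_END_STREAM = 0x1  # meaningful on DATA and HEADERS frames
FLAG_ACK = 0x1         # meaningful on SETTINGS frames


def parse_frame_header(header: bytes) -> dict:
    """Parse a 9-byte HTTP/2 frame header: length(24) type(8) flags(8) R(1) stream(31)."""
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    length_hi, length_lo, ftype, flags, stream_id = struct.unpack(">BHBBI", header)
    name = FRAME_TYPES.get(ftype, "type 0x%x" % ftype)
    return {
        "length": (length_hi << 16) | length_lo,
        "type": name,
        "flags": flags,
        "stream_id": stream_id & 0x7FFFFFFF,  # drop the reserved high bit
        "settings_ack": name == "SETTINGS" and bool(flags & FLAG_ACK),
        "end_stream": name in ("DATA", "HEADERS") and bool(flags & FLAG_END_STREAM),
    }


# A SETTINGS acknowledgement: empty payload, ACK flag set, stream 0.
settings_ack = parse_frame_header(bytes.fromhex("000000040100000000"))
# A 5-byte DATA frame on stream 1 with END_STREAM set.
last_data = parse_frame_header(bytes.fromhex("000005000100000001"))
```

In a frame trace, a client affected by the first issue above would show DATA frames on a stream before any frame for which `settings_ack` is true.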

Because our API users may employ a variety of third-party HTTP libraries, we need to perform more extensive testing before enabling HTTP/2 support for our APIs.

Debugging tools

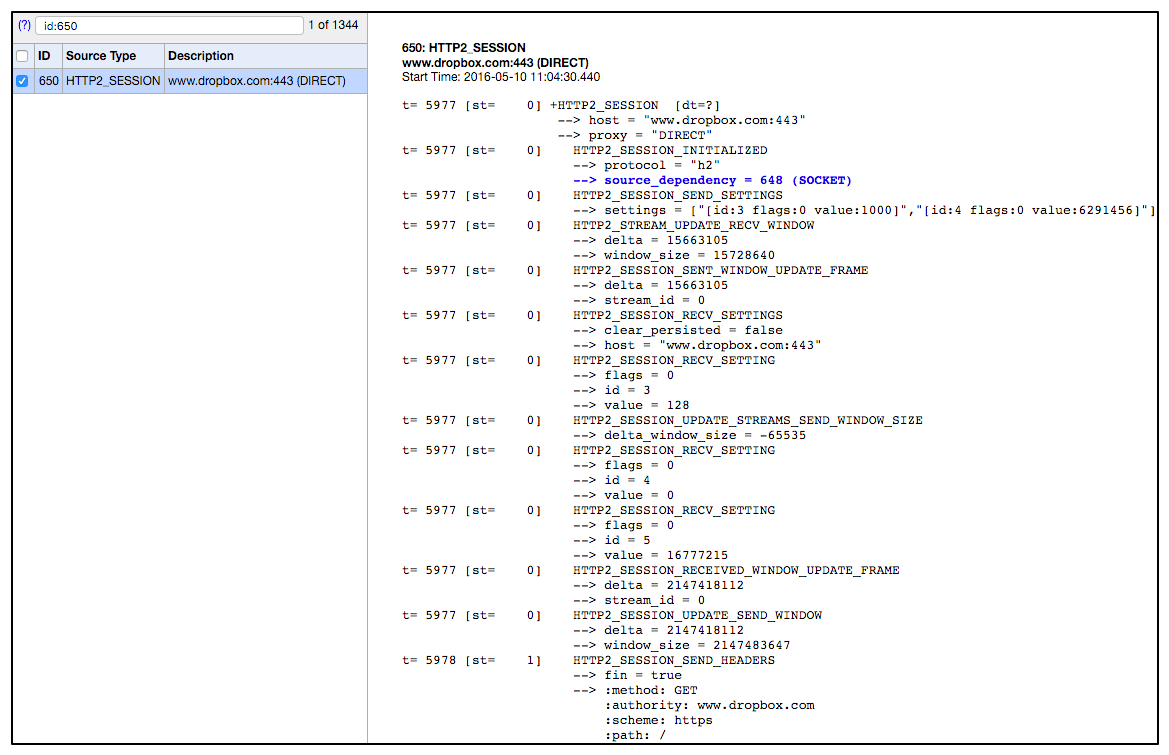

CloudFlare has presented a nice summary of HTTP/2 debugging tools. In addition, we found the Chrome net-internals tool (available at chrome://net-internals/#http2 in Chrome) to be helpful. The figure below is a screenshot of frame exchanges reported by net-internals when opening a new HTTP/2 session to www.dropbox.com.

Overall, we made a smooth transition to HTTP/2. The following are a few takeaways from this post.

- It was simple to enable HTTP/2 in Nginx.

- Significant ingress traffic bandwidth reduction because of header compression.

- Increased POST request latency due to the specific HTTP/2 implementation in Nginx 1.9.15.

- Be careful with enabling HTTP/2 for everything as implementations are not fully compatible yet.

- Canary verification and Nginx error log examination could help catch potential issues early on.

We hope this post is helpful for those who are interested in enabling HTTP/2 for their services or those interested in networking in general. We would also like to hear your feedback in the comments below.

Update: the issues with the increased POST request latency and refused stream errors were resolved in the recent Nginx 1.11.0 release. The figure below shows that the P50 request latency ratio (canary vs. production) decreased after we applied the change on the canary machines. Note that in this figure, the P50 request latency in production had already increased when we upgraded to Nginx 1.9.15 to support HTTP/2.

Contributors: Alexey Ivanov, Dmitry Kopytkov, Dzmitry Markovich, Eduard Snesarev, Haowei Yuan, and Kannan Goundan
