Update (November 14, 2017): Miami, Sydney, Paris, Milan and Madrid have been added to the Dropbox Edge Network.

Since launching Magic Pocket last year, we’ve been storing and serving more than 90 percent of our users’ data on our own custom-built infrastructure, which has helped us to be more efficient and improved performance for our users globally.

But with about 75 percent of our users located outside of the United States, moving onto our own custom-built data center was just the first step in realizing these benefits. As our data centers grew, the rest of our network also expanded to serve our users — more than 500 million around the globe — at light-speed with a consistent level of reliability, whether they were in San Francisco or Singapore.

To do that, we’ve built a network across 14 cities in seven countries on three continents. In doing so, we’ve added hundreds of gigabits of Internet connectivity with transit providers (regional and global ISPs), and hundreds of new peering partners (where we exchange traffic directly rather than through an ISP). We also designed a custom-built edge-proxy architecture into our network. The edge proxy is a stack of servers that acts as the first gateway for users’ TCP and TLS handshakes and is deployed in PoPs (points of presence) to improve performance for users accessing Dropbox from any part of the globe. We evaluated some more standard offerings (CDNs and other “cloud” products), but for our specific needs this custom solution was best. Some users have seen sync speeds increase by as much as 300 percent, and performance has improved across the board.

2014: A Brief History

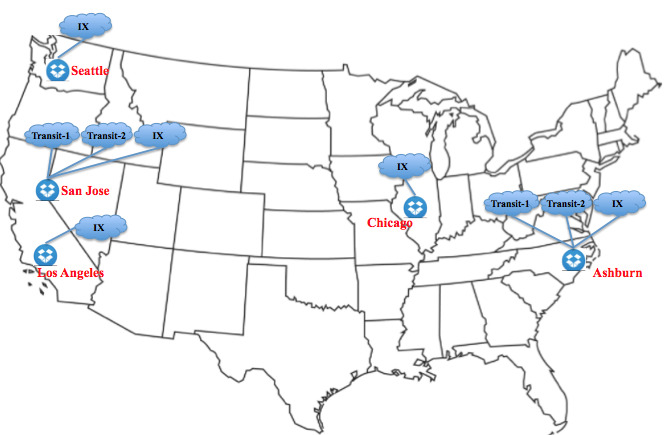

Going back to 2014, our network presence was only in the US, with two data center regions (one on each coast) storing all our user data and five network PoPs in major cities across the country where we saw the most traffic. This meant that users across the globe could only be served from the US. We were heavily reliant on transit, and higher-latency paths across the Internet often limited performance for international users.

Each PoP was also connected to the local Internet Exchange in the facility where it was located, which enabled us to peer with multiple end-user networks also connected to the exchange. At this time we peered with only about 100 networks, and traffic was unevenly spread across our PoPs, with some seeing much more ingress and egress traffic than others over both Peering and Transit links. Because of this traffic pattern, we relied mostly on Transit from tier-1 providers to guarantee reliable and comprehensive connectivity to end users and allow a single point of contact during outages.

Our edge capacity was in the hundreds of gigabits, nearly all of which was with our transit providers, and shifting traffic between PoPs was a challenge.

Dropbox Routing Architecture

In 2014 we were using Border Gateway Protocol (BGP) at the edge of the network to connect with the transit and fabric peers in our network, and within the backbone to connect to the data centers. We used Open Shortest Path First (OSPF) as the underlying protocol for resolving Network Layer Reachability Information (NLRI) required by BGP within the Dropbox network.

Within the routing policies, we used extended BGP communities, which were tagged to prefixes within the network as well as prefixes learned from peers such as transit and fabric. We also used various BGP path attributes to select an egress path for a prefix when more than one path existed.

2015: The year of planning, cleanups

In early 2015, we overhauled our routing architecture, migrating from OSPF to IS-IS, changing our BGP communities, and implementing MPLS-TE to improve how we utilized our network backbone. The latter is an algorithm that provides an efficient way of forwarding traffic throughout the network, avoiding over-utilized and under-utilized links. This improved how our network handled dynamic changes in traffic flows between the growing number of network PoPs. More details on these changes will be covered in a future Backbone Blog.

By mid-2015, we started thinking about how we could serve our users more efficiently, reduce round trip time and optimize the egress path from Dropbox to the end user.

We were growing rapidly outside of the U.S., and started focusing our attention on the European market, specifically looking for locations where we could peer with more end user networks in the region. We selected three European PoPs which provided connectivity to the major peering exchanges and ambitiously expanded our peering edge in both North America and Europe. Our peering policy is open and can be referenced here: Dropbox Peering Policy.

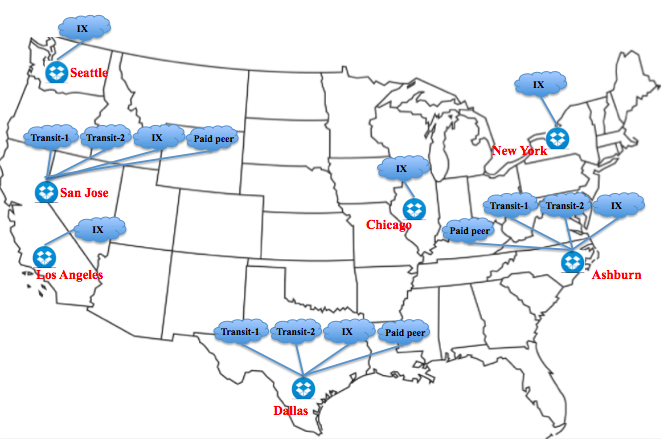

By the end of 2015, we had added three new PoPs at Palo Alto, Dallas and New York, along with hundreds of gigabits of transit capacity, and we had substantially increased both the number of peer networks and our traffic over peering connections. Though we were still predominantly relying on our transit partners, our expanded peering footprint, geographically and in terms of capacity, allowed us to implement more extensive traffic engineering to improve user performance. It also laid the foundation for our new edge proxy design.

2016: The year of expansion, optimization and peering

As we started 2016, we sharpened our focus on three key areas:

- Deploying new PoPs across Europe and Asia to get closer to the user and start improving the sync performance

- Designing and building the custom architecture that would enable faster network connections between our PoPs, including new edge proxy stack architecture, new routing architecture and standardized/optimized IP transit architecture

- Establishing new peering relationships to increase the peering footprint in our network

Expanding across Europe and Asia

Based on traffic-flow data and a variety of other considerations, we narrowed our focus to London, Frankfurt, and Amsterdam, which offer the maximum number of eyeball networks for cities across Europe. These were successfully deployed in 2016 in a ring topology via the backbone and were connected back to the US through New York and Ashburn as ports of entry.

At the same time, we saw an increase in our traffic volumes from Asia in 2016, so we started a similar exercise to what we did for Europe. We decided to expand Dropbox’s edge network across Asia to Tokyo, Singapore, and Hong Kong in Q3 2016. These locations were selected to serve local as well as other eyeball networks that use Dropbox within the Asia-Pacific region.

Edge Proxy stack architecture

Once we had our PoP locations in place, we built out the new architecture to accelerate our network transfers.

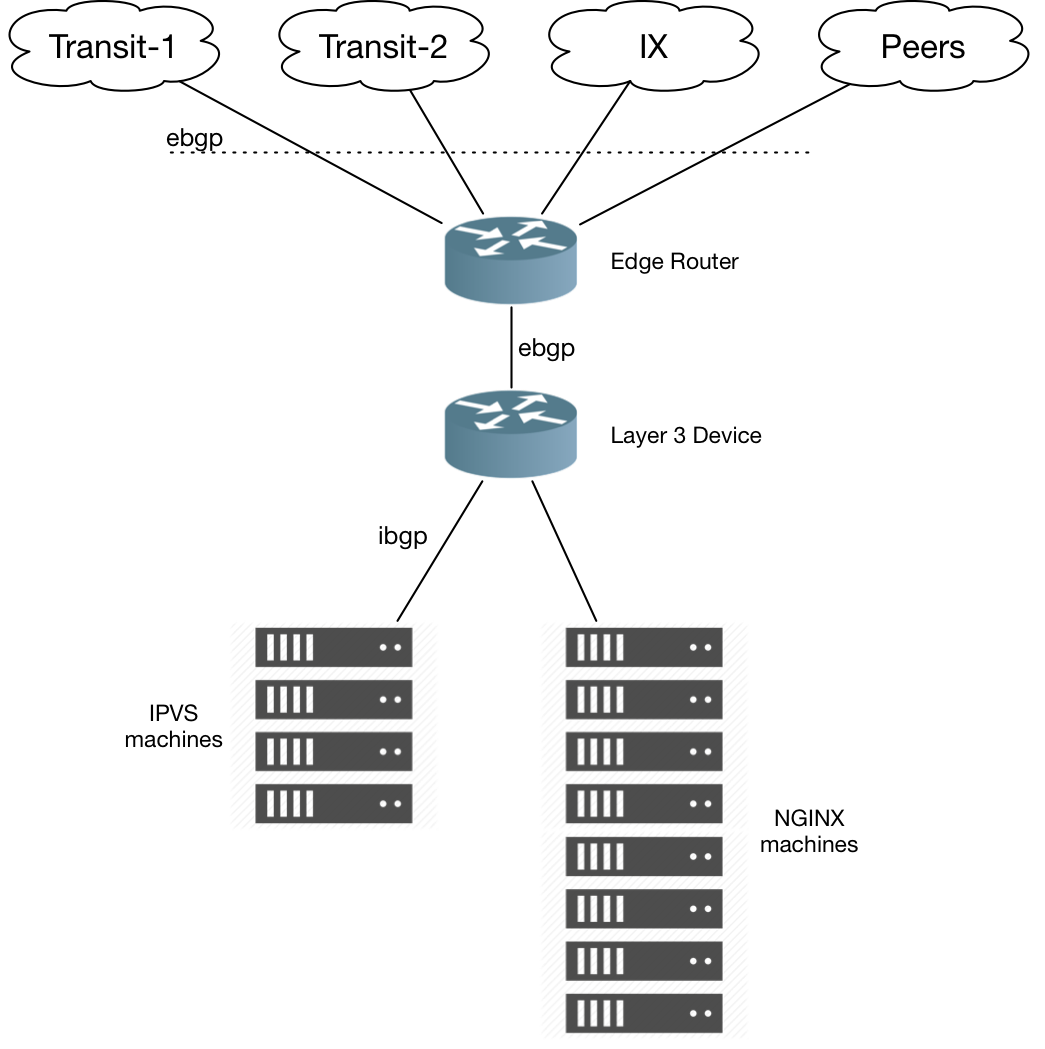

The edge proxy stack handles user-facing SSL termination and maintains connectivity to our backend servers throughout the Dropbox network. The stack comprises IPVS and NGINX machines.

More details on proxy stack architecture will be covered in a future blog post.

Next-Gen routing architecture

- Drive more effective utilization of network resources.

- Enable more predictable failure scenarios.

- Enable more accurate capacity planning.

- Minimize operational complexity.

- Users in close proximity to a metro should be routed to the closest PoP within the metro, rather than being served out of any other PoP, which cuts down on latency and improves the user experience.

- Some metros will have multiple PoPs for redundancy.

- A metro is a failure domain in itself, i.e., traffic can be shifted away from the metro as needed by withdrawing metro-specific prefixes from the Internet.

- Prefixes are contained within regions/metro and are only advertised out to the internet from that region/metro.

- Routing decisions within a metro versus between metros are made more apparent.
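The idea of a metro as a failure domain can be sketched as a small model. This is an illustrative sketch only, not Dropbox's implementation: the `Metro` class, its `announce` and `drain` methods, the metro names, and the prefixes (taken from documentation address ranges) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Metro:
    """Hypothetical model of a metro that advertises only its own prefixes."""
    name: str
    prefixes: set[str]
    advertised: set[str] = field(default_factory=set)

    def announce(self) -> None:
        # A metro only ever advertises prefixes that belong to it.
        self.advertised = set(self.prefixes)

    def drain(self) -> None:
        # Withdrawing the metro-specific prefixes shifts traffic away.
        self.advertised.clear()


def reachable_metros(metros: list[Metro]) -> list[str]:
    """Names of metros that currently advertise at least one prefix."""
    return [m.name for m in metros if m.advertised]


# Documentation-range prefixes (RFC 5737), used purely as placeholders.
metros = [
    Metro("metro-a", {"192.0.2.0/24"}),
    Metro("metro-b", {"198.51.100.0/24"}),
]
for m in metros:
    m.announce()

metros[0].drain()
print(reachable_metros(metros))  # ['metro-b']
```

Because each prefix lives in exactly one metro in this model, draining one metro never changes what another metro advertises.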

Standardizing & Optimizing IP transit architecture

Dropbox’s transit capacity until mid-2016 was more uneven and imbalanced than it is today. The IP transit ports in every metro had different capacity, so if we had to drain a metro, we wouldn’t necessarily be able to route traffic to the nearest PoP due to limited capacity. To fix this issue, we standardized the IP transit capacity across all the PoPs to ensure sufficient capacity is available in each PoP. Now, if a PoP goes down or if we have to do a Disaster Recovery Testing (DRT) exercise, we know that we will have enough capacity to move traffic between metros. Dropbox ingress traffic coming from the transit providers was also imbalanced, so we worked with our tier-1 providers to implement various solutions to fix the ingress imbalance into our ASN.
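The drain problem described above comes down to a headroom check. The following is a minimal sketch under assumed numbers: the function name `can_absorb` and every capacity and load figure are hypothetical, chosen only to show the before and after cases.

```python
def can_absorb(drained_load_gbps: float, target_load_gbps: float,
               target_capacity_gbps: float) -> bool:
    """True if the target PoP has headroom for the drained PoP's traffic."""
    return target_load_gbps + drained_load_gbps <= target_capacity_gbps


# Uneven capacity: the nearest PoP is too small to take the drained traffic.
print(can_absorb(drained_load_gbps=60, target_load_gbps=30,
                 target_capacity_gbps=80))   # False

# Standardized capacity: the nearest PoP is sized to take the extra traffic.
print(can_absorb(drained_load_gbps=60, target_load_gbps=30,
                 target_capacity_gbps=100))  # True
```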

We also redesigned our edge routing policies for IP transit so that a prefix now uses the shortest AS-PATH to exit our ASN between transit providers. If AS-PATH lengths tie among multiple tier-1 transit providers, another BGP path-selection attribute, the Multi-Exit Discriminator (MED), is used to break the tie.
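The selection rule described above (shortest AS-PATH first, then MED as the tie-breaker) can be sketched in a few lines. This is a deliberate simplification: the full BGP decision process considers other attributes as well, and routers by default compare MED only between paths learned from the same neighboring AS. The `Path` type, the provider labels, and the AS numbers (from the range reserved for documentation) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    provider: str              # hypothetical transit provider label
    as_path: tuple[int, ...]   # AS numbers from the documentation range
    med: int                   # Multi-Exit Discriminator, lower is preferred


def best_egress(paths: list[Path]) -> Path:
    """Prefer the shortest AS-PATH; break ties with the lowest MED."""
    return min(paths, key=lambda p: (len(p.as_path), p.med))


paths = [
    Path("transit-a", (64500, 64510, 64520), med=10),
    Path("transit-b", (64501, 64520), med=50),
    Path("transit-c", (64502, 64520), med=20),
]
print(best_egress(paths).provider)  # transit-c
```

Here `transit-a` loses on AS-PATH length despite its low MED, and `transit-c` beats `transit-b` only because the two tie on length.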

Expand Peering

The Edge Network Today

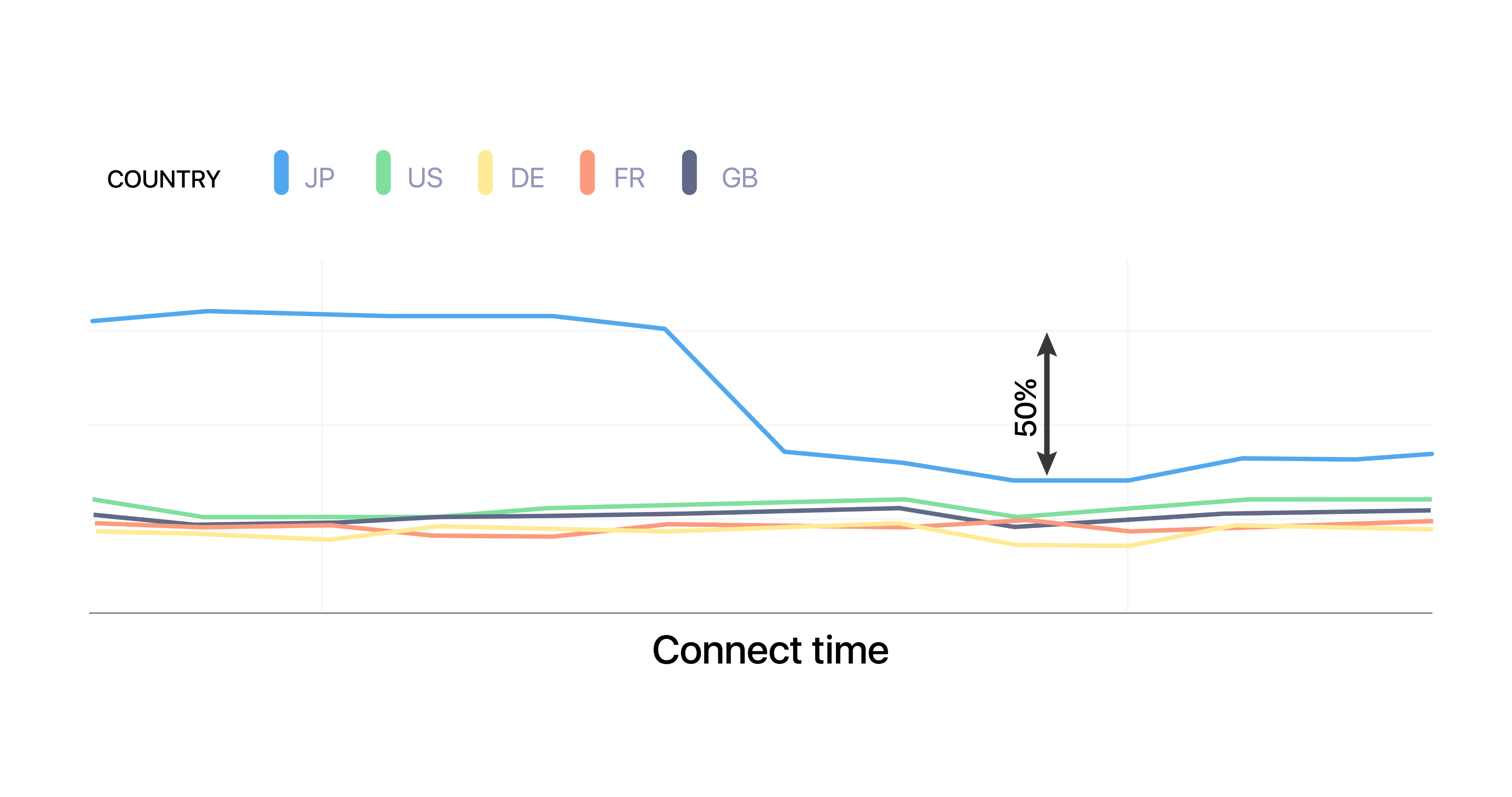

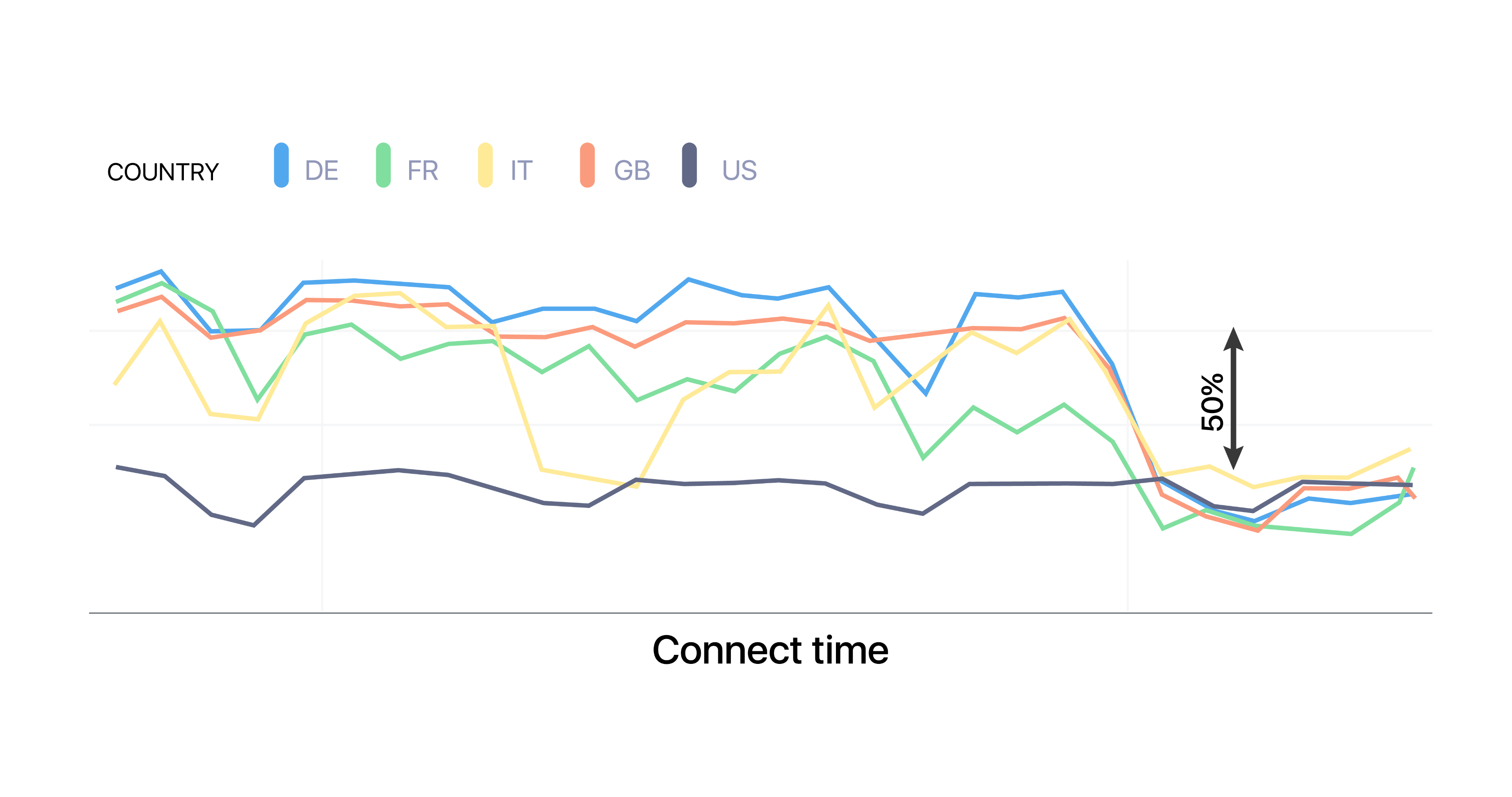

By executing SSL handshakes at our PoPs instead of sending them to our data centers, we’ve been able to significantly improve connection times and accelerate transfer speeds. We’ve tested and applied this configuration in various markets in Europe and Asia. For some users in Europe, for example, median download speeds are 40% faster after introducing edge proxy servers, while median upload speeds are approximately 90% faster. In Japan, median download speeds have doubled, while median upload speeds are three times as fast.

The graphs below show major improvements in the TCP/SSL experience for users in Europe and Asia-Pacific after the edge proxy stacks were deployed in every Dropbox PoP. These graphs plot connect times for different countries (lower is better).
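A rough back-of-the-envelope calculation shows why terminating handshakes closer to the user shortens connection times. The sketch assumes one round trip for the TCP handshake and two for a full TLS 1.2 handshake without session resumption; the round-trip times are hypothetical and are not measurements from this post.

```python
TCP_HANDSHAKE_RTTS = 1   # SYN / SYN-ACK before data can flow
TLS_HANDSHAKE_RTTS = 2   # assumption: full TLS 1.2 handshake, no resumption


def setup_time_ms(rtt_ms: float) -> float:
    """Time spent on TCP + TLS handshakes before the first request byte."""
    return (TCP_HANDSHAKE_RTTS + TLS_HANDSHAKE_RTTS) * rtt_ms


# Hypothetical round-trip times, not measurements.
rtt_to_data_center_ms = 150.0
rtt_to_nearby_pop_ms = 20.0

print(setup_time_ms(rtt_to_data_center_ms))  # 450.0
print(setup_time_ms(rtt_to_nearby_pop_ms))   # 60.0
```

The request itself still has to travel between the PoP and the data center, so the saving in this model applies to the handshake round trips only.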

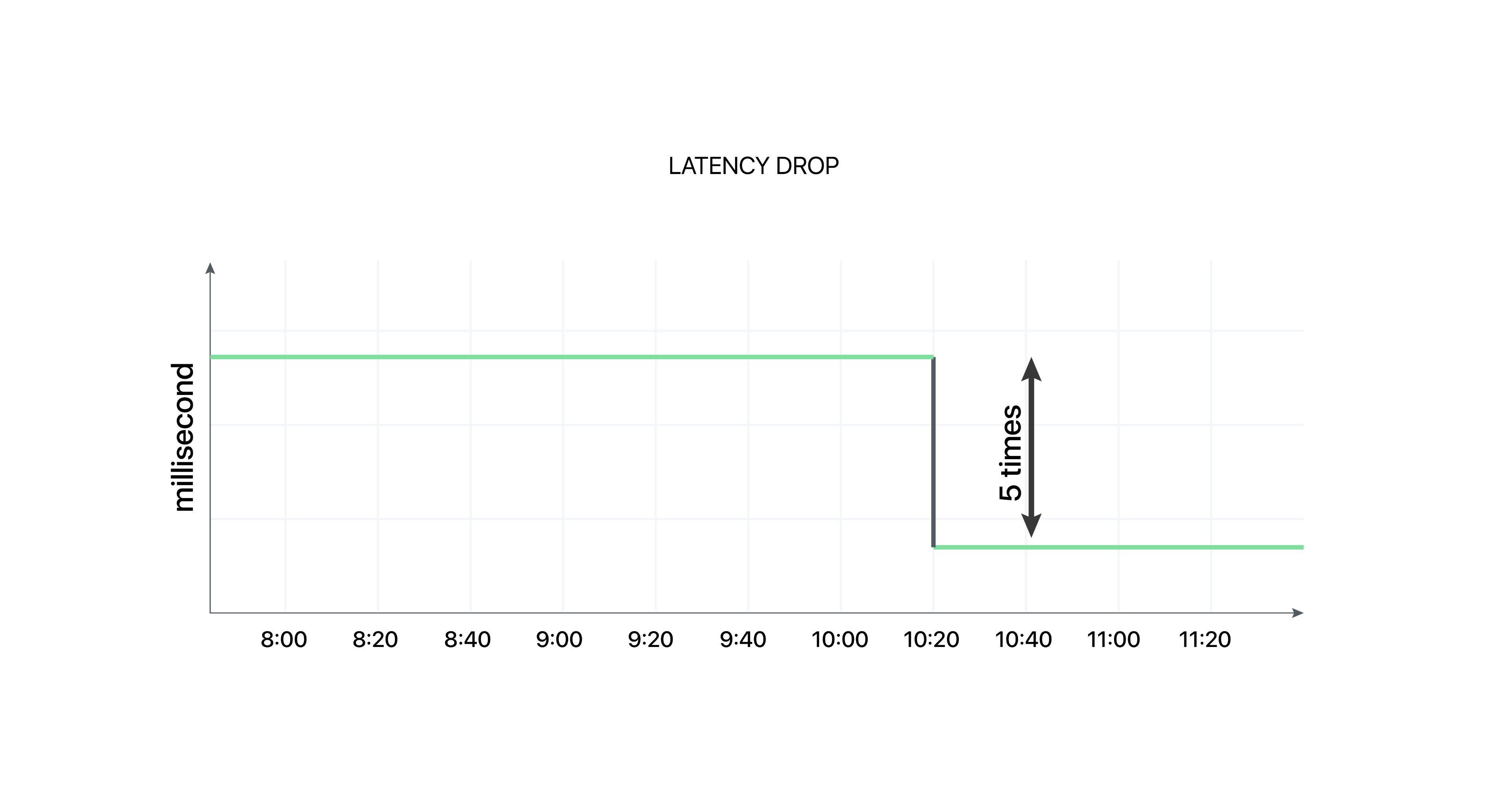

We’ve also heard from several customers across Europe and Asia that their performance to Dropbox has significantly improved since we launched these PoPs. The graph below shows how latency dropped for one of these customers.

By the end of 2016, we had added six new PoPs across Europe and Asia, giving us a total of 14 PoPs and bringing our edge capacity into the terabits. We added hundreds of additional gigabits of transit, fabric, and private peering capacity based on metro/regional traffic ratios, and standardized our transit footprint across the network. We also added 200+ unique ASNs via peering. Today, the majority of our Internet traffic goes from a user’s best/closest PoP directly over peering, improving performance for our users and improving our network efficiency.