Open source is not just for software. The same benefits of rapid innovation and community validation apply to hardware specifications as well. That’s why I’m happy to write that v1.0 of the RunBMC hardware spec has been contributed to the Open Compute Project (OCP). Before I get into what BMCs (baseboard management controllers) are and why modern data centers depend on them, let’s zoom out to what companies operating at cloud scale have learned.

Cloud software companies like Dropbox have millions, and in some cases, billions of users. When these cloud companies started building out their own data centers, they encountered new challenges with reliability, maintainability, security, and cost when operating at such massive scale. Many of the solutions involved improving how machines are operated, monitored, and repaired at scale.

The open data center

Large open source projects have a security advantage over closed source, because there are more people testing—and then fixing—the code. The operating system that virtually all cloud data centers run on is Linux, the world’s largest open source project.

The Open Compute Project was founded in 2011 to create a community to share and develop open source designs and specifications for data center hardware. Historically, companies had to work directly with vendors to independently optimize and customize their systems. As the size and complexity of these systems have grown, the benefits of collaborating freely and breaking open the black box of proprietary IT infrastructure have also grown.

With OCP, contributors to the community can now help design commodity hardware that is more efficient, flexible, secure, and scalable. Dropbox recently joined OCP and presented the RunBMC specification at the 2019 OCP Summit.

Five years ago, when Facebook was designing a new data center switch, open source hadn’t yet reached the baseboard management controller. The BMC is a small hardware component that serves as the brains behind the Intelligent Platform Management Interface (IPMI), which specifies how sysadmins can perform out-of-band management and monitoring of their systems.

BMCs do a lot of telemetry on servers like checking temperature ranges, voltage, current, and other kinds of health checks. Admins typically monitor these statistics through IPMI, issuing commands through a network topology that's separate and insulated from the communication between the server’s CPU, firmware, and operating system. The problem is, there are a ton of security vulnerabilities in the IPMI stack, and that stack has historically been a black box of closed source software.
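To make the idea of a range-based health check concrete, here is a minimal Python sketch. It is only an illustration: the sensor names, limits, and the `out_of_range` helper are all hypothetical, and it is not how OpenBMC or any particular IPMI stack implements telemetry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorLimit:
    """Allowed range for one sensor reading (inclusive on both ends)."""
    low: float
    high: float


# Hypothetical sensors and limits, chosen only for illustration.
LIMITS = {
    "cpu_temp_c": SensorLimit(5.0, 85.0),
    "p12v_volts": SensorLimit(11.4, 12.6),
    "p12v_amps": SensorLimit(0.0, 40.0),
}


def out_of_range(readings, limits=LIMITS):
    """Return {sensor: value} for every reading outside its allowed range."""
    alerts = {}
    for name, value in readings.items():
        limit = limits.get(name)
        if limit is None:
            continue  # no limit configured for this sensor, so skip it
        if not (limit.low <= value <= limit.high):
            alerts[name] = value
    return alerts


if __name__ == "__main__":
    sample = {"cpu_temp_c": 91.0, "p12v_volts": 12.1, "p12v_amps": 18.5}
    print(out_of_range(sample))
```

In a real system the readings would come from the BMC's sensors and the alerts would be reported out-of-band; here both ends are stubbed with plain dictionaries.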

The Facebook engineers realized that the best way to confront these risks, and to write more maintainable code, was to open up the software that runs on BMCs and reduce the surface area for vulnerabilities. What they found was a lot of bugs and a lot of bloat caused by unnecessary features that had accumulated over time. Instead of fixing all the bugs, they decided to start over and write a new stack on top of one of the baseboard development packages that chip vendors make available.

OpenBMC, the resulting open source project, started at a 2014 Facebook hackathon to solve scaling challenges for the new switch. From there it has grown into a larger project supported by IBM, Intel, Google, Microsoft, and Facebook that addresses the needs not only of cloud providers but also of enterprise, telco, and high-performance computing (HPC) data centers. OpenBMC provides a common software management stack for BMCs, which has been a big win for people designing data centers, but we thought a common hardware approach could do even more. With RunBMC, we now have a hardware specification that defines the interface between BMC subsystems and the compute platforms they need to manage. This post describes how we got there.

BMC hardware

The BMC is usually built into a system on a chip (SoC), and much of the functionality it provides is common across systems. You have RMII and RGMII to provide Ethernet interfaces; some type of boot flash over SPI NOR; various IOs to toggle devices; PCI Express and LPC to talk to the host; and a lot more.

Taking a closer look at schematics, layouts, and systems, we performed a deep dive into many of the OCP BMC sub-systems, and tried to understand how they are being used in different implementations.

How are they the same, how are they different, and what are the requirements that would drive towards a standard for all OCP platforms? We came up with some different types of ideas for inputs, outputs, interrupts, serial buses, etc., until we could ask, What is the ideal interface all platforms could leverage?

BMC system topologies look very similar, but once you dig into the details, they deviate on a platform by platform basis. And all these variances trickle down into code and management challenges, and over time your software stack becomes more complex. Once you standardize the hardware interface, you have consistent interfaces which can drive more consistent software.

Open source with benefits

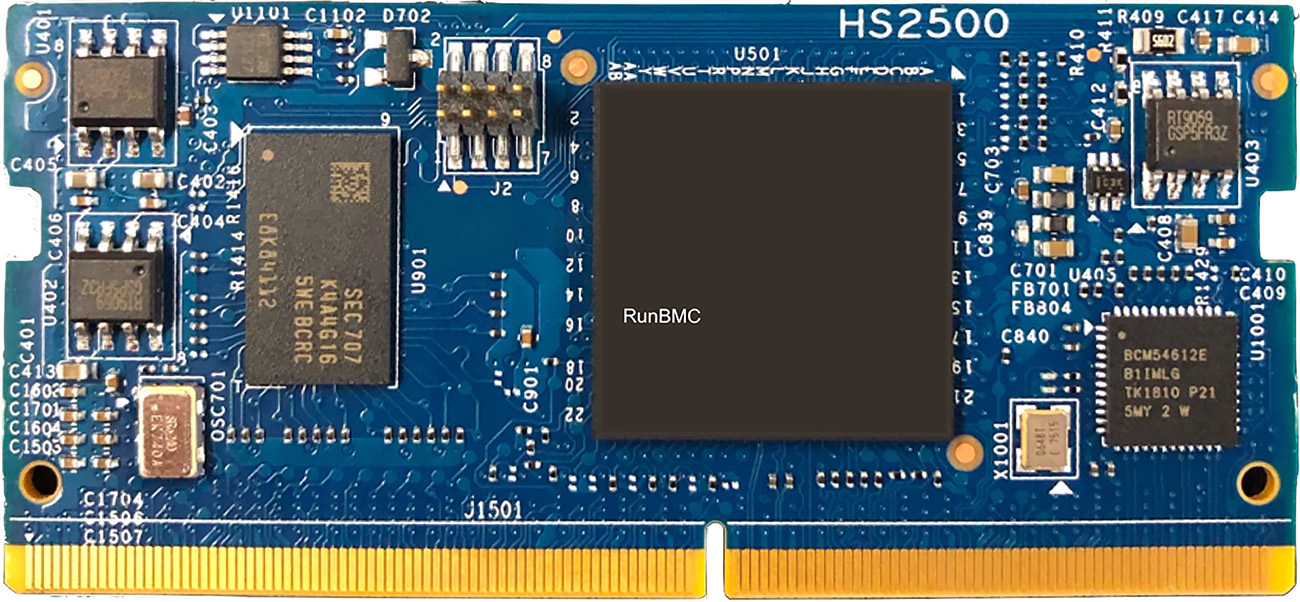

Based on this analysis, we developed the first prototype and presented it at the 2018 OCP Summit. The prototype had a super high-density connector with a super small pitch, and it generated a lot of interest. Sharing that prototype at OCP really started the conversation and drove the project forward to where we are today.

Through the OCP community we were able to connect with a very wide audience to refine our concept. Based on all of this feedback, we’ve identified the biggest wins for RunBMC.

Security

There’s a proliferation of hardware devices and SKUs in contemporary data centers, all with different BMCs. Last summer’s controversies over potential hardware vulnerabilities built into server networks have people paying close attention to the BMC subsystem. Having a standardized BMC interface will enable companies to “lock down” their BMC subsystem.

Having a design that's more stable, that rolls at a slower cadence than compute platforms, lets you harden your BMC. With so many different BMCs in play, it’s really difficult to control everything and guard against vulnerabilities like man-in-the-middle attacks. By focusing security efforts on a single BMC card, system designers can standardize their own software management stack.

Modularizing the BMC subsystem will also make it easier for designers to add hardware security features to the module. You can harden anything that's prone to attack, like the BIOS flash, by adding peripherals like a Titan, Cerberus, or TPM security chip that monitors all the traffic going back and forth from the IO to the reference board.

Supply chain

Imagine designing a server and going to the OCP marketplace and picking from two or three BMCs and being able to place an order for a million of them. For some of the larger cloud companies, that’s a very attractive scenario.

The other important point here is that when the industry adopts these open hardware standards, they drive down cost and complexity for everyone else. Dropbox may not be the first in line to put RunBMC into production, but when we do, we’ll benefit from the economies of scale created by the companies that were first in line.

Faster industry innovation

One of the things that’s been particularly exciting and gratifying about this project is to see how quickly hardware companies have embraced it. At the OCP Global Summit there were multiple booths showing next-generation servers supporting RunBMC.

So not only does this kind of open innovation have downstream supply chain benefits across the industry, it also allows relatively small players like Dropbox to have a positive impact on the hardware designs of much bigger players in our industry.

The RunBMC specification

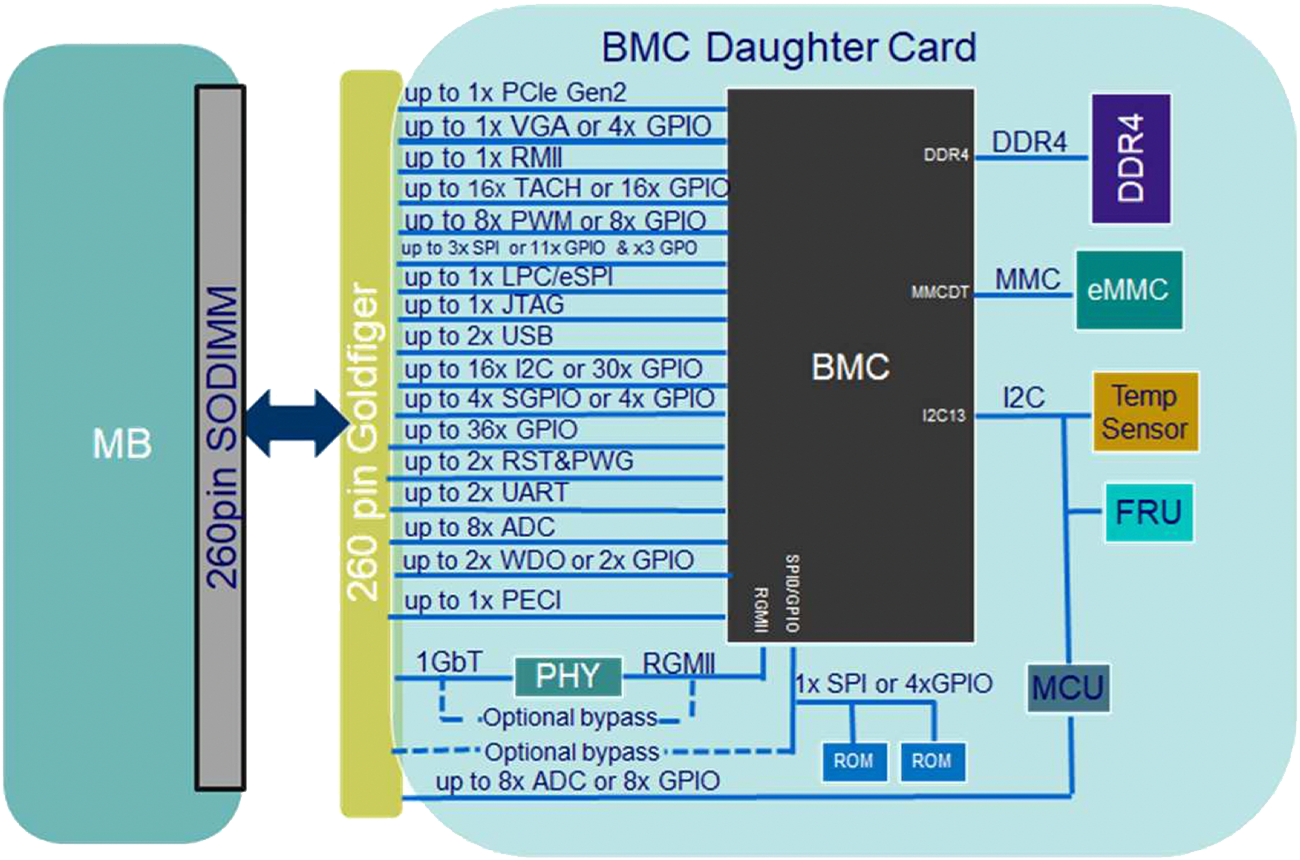

The RunBMC specification defines the interface between BMCs and their respective platform.

In the diagram, the double arrow shows all the IO connectivity that we've standardized, which should cover 95-99% of platform BMC requirements.

The spec uses a 260-pin DDR4 SO-DIMM connector with relaxations in some of the dimensions.

This form factor can fit vertically in 1RU or 2RU servers, and right-angle and other angled connectors are also available. So there’s a lot of flexibility in the placement inside platforms.

The table below lists the interfaces in the specification. RGMII, RMII, LPC, eSPI, PCI Express, USB, as well as various serial interfaces and GPIOs, ADCs, PWMs, and TACHs are all provided through the RunBMC interface.

| Function | Signal Count for Interface | Number of Interfaces | Number of used pins |
|---|---|---|---|
| Form Factor - 260 SO-DIMM4 | | | |
| Power 3.3V | 5 | | |
| VDD_RGMII_REF | 1 | | |
| LPC 3.3v or ESPI 1.8v | 1 | | |
| Power 12 V | 1 | | |
| Ground | 38 | | |
| ADC | 1 | 8 | 8 |
| GPI/ADC | 1 | 8 | 8 |
| PCIe | 7 | 1 | 7 |
| RGMII/1GT PHY | 14 | 1 | 14 |
| VGA / GPIOs | 7 | 1 | 7 |
| RMII/NC-SI | 10 | 1 | 10 |
| Master JTAG/GPIO | 6 | 1 | 6 |
| USB host | 4 | 1 | 4 |
| USB device | 3 | 1 | 3 |
| SPI1: SPI for host - quad capable | 7 | 1 | 7 |
| SPI2: SPI for host | 5 | 1 | 5 |
| FWSPI: SPI for Boot - quad capable | 7 | 1 | 7 |
| SYSSPI: System SPI | 4 | 1 | 4 |
| LPC/eSPI | 8 | 1 | 8 |
| I2C / GPIOs | 2 | 12 | 24 |
| GPIOs / I2C | 2 | 3 | 6 |
| I2C | 2 | 1 | 2 |
| UARTs (TxD, RxD) | 2 | 4 | 8 |
| CONSOLE (Tx, Rx) | 2 | 1 | 2 |
| GPIO / Pass-Through | 2 | 2 | 4 |
| PWM | 1 | 8 | 8 |
| Tacho/GPIOs | 1 | 16 | 16 |
| PECI | 2 | 1 | 2 |
| GPIOs | 1 | 33 | 33 |
| GPIO/GPIO Expanders (Serial GPIO) | 4 | 1 | 4 |
| Reset and Power Good | 1 | 3 | 3 |
| Watchdogs/GPIO | 1 | 2 | 2 |
| BOOT_IND# / GPIO | 1 | 1 | 1 |
| RESERVED/KLUDGE | 1 | 1 | 1 |
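
As a sanity check on the table above, the pin budget can be tallied in a few lines of Python. This is a sketch rather than anything from the spec itself: the names `INTERFACE_ROWS`, `POWER_GROUND_PINS`, `inconsistent_rows`, and `total_pins` are made up for illustration, and treating the single number on each power and ground row as a pin count is an assumption. Under that assumption, the rows add up to exactly 260, the pin count of the connector.

```python
# Rows copied from the table: (function, signals per interface, interfaces, pins used).
INTERFACE_ROWS = [
    ("ADC", 1, 8, 8),
    ("GPI/ADC", 1, 8, 8),
    ("PCIe", 7, 1, 7),
    ("RGMII/1GT PHY", 14, 1, 14),
    ("VGA / GPIOs", 7, 1, 7),
    ("RMII/NC-SI", 10, 1, 10),
    ("Master JTAG/GPIO", 6, 1, 6),
    ("USB host", 4, 1, 4),
    ("USB device", 3, 1, 3),
    ("SPI1: SPI for host - quad capable", 7, 1, 7),
    ("SPI2: SPI for host", 5, 1, 5),
    ("FWSPI: SPI for Boot - quad capable", 7, 1, 7),
    ("SYSSPI: System SPI", 4, 1, 4),
    ("LPC/eSPI", 8, 1, 8),
    ("I2C / GPIOs", 2, 12, 24),
    ("GPIOs / I2C", 2, 3, 6),
    ("I2C", 2, 1, 2),
    ("UARTs (TxD, RxD)", 2, 4, 8),
    ("CONSOLE (Tx, Rx)", 2, 1, 2),
    ("GPIO / Pass-Through", 2, 2, 4),
    ("PWM", 1, 8, 8),
    ("Tacho/GPIOs", 1, 16, 16),
    ("PECI", 2, 1, 2),
    ("GPIOs", 1, 33, 33),
    ("GPIO/GPIO Expanders (Serial GPIO)", 4, 1, 4),
    ("Reset and Power Good", 1, 3, 3),
    ("Watchdogs/GPIO", 1, 2, 2),
    ("BOOT_IND# / GPIO", 1, 1, 1),
    ("RESERVED/KLUDGE", 1, 1, 1),
]

# Assumption: the single number on each power/ground row is a pin count.
POWER_GROUND_PINS = {
    "Power 3.3V": 5,
    "VDD_RGMII_REF": 1,
    "LPC 3.3v or ESPI 1.8v": 1,
    "Power 12 V": 1,
    "Ground": 38,
}

CONNECTOR_PINS = 260  # pin count of the SO-DIMM connector named in the spec


def inconsistent_rows(rows):
    """Return rows where pins used != signals per interface * number of interfaces."""
    return [row for row in rows if row[1] * row[2] != row[3]]


def total_pins(rows, power_ground):
    """Sum the pins used by all interfaces plus the power and ground pins."""
    return sum(row[3] for row in rows) + sum(power_ground.values())


if __name__ == "__main__":
    print("inconsistent rows:", inconsistent_rows(INTERFACE_ROWS))
    print("total pins:", total_pins(INTERFACE_ROWS, POWER_GROUND_PINS))
    print("connector pins:", CONNECTOR_PINS)
```

Many of the rows are multiplexed (for example "I2C / GPIOs"), so the tally counts physical pins, not the number of functions a platform can use at once.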

The open road ahead

Now that the RunBMC spec is published, we expect to see contributions of RunBMC reference boards submitted by the community. We’re glad to be part of OCP so we can share what we're learning as we scale our infrastructure and carve the path for more secure, energy-efficient, and cost-effective hardware.