Making Carousel highly responsive was a critical part of providing an awesome user experience. Carousel wouldn’t be as usable or effective if the app stuttered or frequently caused users to wait while content loaded. In our last post, Drew discussed how we optimized our metadata loading pipeline to respond to data model changes quickly, while still providing fast lookups at UI bind time. With photo metadata in memory, our next challenge was drawing images to the screen. Dealing with tens of thousands of images while rendering at 60 frames per second was a challenge, especially in the mobile environments of iOS and Android. Today we are going to take a look at our image decoding and rendering pipeline to provide some insight into the solutions we’ve built.

BACKGROUND: When work on Carousel first started, we set three key implementation goals for ourselves:

- Data availability: Users shouldn’t need to wait for data

- Data presentation: Scrolling should always be smooth - we should always be able to maintain a frame rate of 60 frames per second

- Data fidelity: Users should always see high fidelity images

It was incredibly important to us that we meet these goals in the main Carousel views as the user scrolls through their photos. The task at hand will be familiar to those who have worked with drawing before: decode the thumbnails, which we store as JPEGs for data compression purposes, and display them in the UI.

In general we lay out images three in a row in the main Carousel view. To determine what thumbnail size to use, we ran some UI experiments on modern phones such as the iPhone 5 and the Nexus 5, and decided that the cutoff resolution for a high fidelity thumbnail would be around 250px by 250px - anything lower would look visibly degraded. Since Dropbox always pre-generates 256px by 256px thumbnails for all uploaded photos and videos, we were leaning toward using those. To further validate this choice, we measured the network time needed to download such a thumbnail (~0.1s in batch over wifi), its size on disk (~28KB), the time to decode it (9ms on iPhone 5), and its memory consumption after being decoded into a bitmap (0.2MB). All numbers looked reasonable, and we decided to go with these 256px by 256px thumbnails.

Those who have worked with intensive image drawing might have predicted that image decoding would be a problem for us. And sure enough! While JPEGs are efficient for data compression, they are also expensive to decode into pixel data. As a data point, decoding a 256px by 256px thumbnail on a Nexus 4 takes about 10ms. For a 512px by 512px thumbnail, this increases to 50ms.

A naive implementation might try to draw 256px by 256px thumbnails on the main thread synchronously. But in order to render at 60 frames per second, each frame needs to be rendered in about 16ms. In a single frame, when a row of three thumbnails appears on screen, we must decode 3 thumbnails. With the naive implementation at 10ms per thumbnail, decoding alone would take 30ms for that frame, nearly twice the budget. You can see immediately that such an approach wouldn’t work without dropping frames and losing smooth scrolling.
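The frame-budget arithmetic above can be written out as a short calculation. This is only a sketch that uses the figures quoted in the text (60 frames per second, three thumbnails per row, about 10ms per 256px decode); the constant names and the `frames_consumed` helper are made up for illustration.

```python
import math

# Figures quoted in the post; the names are illustrative.
TARGET_FPS = 60
THUMBS_PER_ROW = 3      # thumbnails that appear together when a new row scrolls in
DECODE_MS_256 = 10      # approximate decode time for one 256px by 256px thumbnail

frame_budget_ms = 1000 / TARGET_FPS                # about 16.7 ms per frame
decode_cost_ms = THUMBS_PER_ROW * DECODE_MS_256    # 30 ms of synchronous decoding


def frames_consumed(work_ms: float, budget_ms: float) -> int:
    """Number of frame slots needed to finish `work_ms` of main-thread work."""
    return math.ceil(work_ms / budget_ms)


# Synchronous decoding of one row spans more than one frame slot,
# so at least one frame is dropped whenever a new row appears.
slots = frames_consumed(decode_cost_ms, frame_budget_ms)
```

With these numbers, one row of decoding occupies two frame slots instead of one, which is where the dropped frame comes from.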

Naive Approach

- Good for: Data availability, and data fidelity

- Bad for: Data presentation

FIRST SOLUTION: A fairly standard approach to the problem above is to offload the decoding of the 256px by 256px thumbnails to a background thread. This frees up the main thread for drawing and preserves smooth scrolling. However, this yields a separate problem of not having content to display to the users as they scroll. Have you ever scrolled really quickly in an app and only seen placeholder squares where you should see images? We call this the “gray squares” problem and we wanted to avoid it in Carousel.

First Solution: Background queue

- Good for: Data presentation and data fidelity

- Bad for: Data availability

SECOND SOLUTION: It became clear that if we wanted scrolling to be smooth we had to render in the background, but latency was an issue with that approach. What could we do to hide this? One idea was that if we couldn’t decode fast enough, we could decode less. Again we ran some UI experiments to find the lowest resolution thumbnails that looked degraded to the eye but still gave a high enough level of detail for a user to be able to understand the content of the photo. It turns out this is about 75px by 75px. We wanted these to be square thumbnails because they were displayed as square thumbnails in most Carousel views, and we didn’t want to decode any more than what needed to be displayed. Another advantage of having small thumbnails is that the variance of JPEG file size is smaller, so every image takes roughly the same amount of time to decode. Furthermore, we already pre-generated 75px by 75px thumbnails on the server. Thus we decided to download and cache a 75px by 75px thumbnail along with a 256px by 256px thumbnail for each image.

The 75px by 75px thumbnails take roughly 1/5 of the time to render compared to 256px by 256px thumbnails, a big performance win gained at the cost of image quality. Here was the dilemma: using those small thumbnails alone would go against our goal of data fidelity, but rendering big thumbnails would be too slow when the user scrolls quickly. We intuited that a user scrolling quickly would prefer to see a preview of each thumbnail, rather than nothing at all.

So, what if we detect when the user scrolls quickly, and render 75px by 75px thumbs on the main thread on demand? Since it’s blazingly fast to render these low-resolution thumbnails (~2.7ms on iPhone 5), we could still preserve smooth scrolling. As soon as we detect the user is scrolling slowly, we add a rendering operation for 256px by 256px thumbnails onto a queue which is processed by a background thread. Decoding work is processed one job at a time from the beginning of the queue. As the user scrolls, new thumbnails are queued at the beginning since it’s most urgent to decode them. In order to only render relevant 256px by 256px thumbnails, we dequeue the stale requests as images go off the screen. This tight connection with the UI ensures that no extra work is done to process offscreen thumbnails.

To further ensure no extra work is done and reduce CPU resource utilization, we only render the larger thumbnails when the user is likely to see them. We check if the user is scrolling too quickly by listening to draw callbacks (CADisplayLink on iOS) and measuring the change in scroll offset over time.
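To make the queueing behaviour described above concrete, here is a minimal sketch of a newest-first job queue that also supports dropping stale requests. It is not Carousel's actual code: the `RenderQueue` class, its method names, and the string photo ids are all hypothetical, and a real version shared with a background thread would need locking, which is omitted here.

```python
from collections import OrderedDict
from typing import Optional


class RenderQueue:
    """Newest-first queue of pending high-resolution decode jobs (illustrative)."""

    def __init__(self) -> None:
        # Insertion order doubles as priority: the last key is the newest request.
        self._jobs = OrderedDict()

    def enqueue(self, photo_id: str) -> None:
        """Add a job, or move an already queued one to the front of the line."""
        self._jobs.pop(photo_id, None)
        self._jobs[photo_id] = None

    def cancel(self, photo_id: str) -> None:
        """Drop a stale job, e.g. when its thumbnail scrolls off screen."""
        self._jobs.pop(photo_id, None)

    def next_job(self) -> Optional[str]:
        """Return the most recently requested job, or None when the queue is idle."""
        if not self._jobs:
            return None
        photo_id, _ = self._jobs.popitem(last=True)
        return photo_id

    def __len__(self) -> int:
        return len(self._jobs)


# Usage: three thumbnails scroll on screen, then "a" scrolls off before it is decoded.
queue = RenderQueue()
for pid in ("a", "b", "c"):
    queue.enqueue(pid)
queue.cancel("a")
order = [queue.next_job(), queue.next_job(), queue.next_job()]
```

In this usage the worker would decode "c" first, then "b", and never touch "a". Because cancelled jobs are removed rather than skipped later, the queue length stays bounded by what is currently on screen.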

Second Solution: Background queue + low resolution thumbs on main thread

- Good for: Data presentation, data availability, and data fidelity
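The scroll-speed check mentioned above (sampling the scroll offset on each draw callback and dividing the change by the elapsed time) might look roughly like the following. This is a sketch under assumptions: the `ScrollSpeedMonitor` class is hypothetical, and the threshold of 2000 pixels per second is an invented value, since the post does not state the cutoff that was used.

```python
class ScrollSpeedMonitor:
    """Classifies scrolling as fast or slow from per-frame offset samples."""

    def __init__(self, fast_threshold_px_per_s: float = 2000.0) -> None:
        # The default threshold is a made-up value for illustration only.
        self.fast_threshold = fast_threshold_px_per_s
        self._last_offset = None
        self._last_time = None
        self.speed = 0.0  # most recent speed estimate, in pixels per second

    def on_frame(self, offset_px: float, timestamp_s: float) -> None:
        """Call once per draw callback with the current scroll offset."""
        if self._last_time is not None and timestamp_s > self._last_time:
            delta = abs(offset_px - self._last_offset)
            self.speed = delta / (timestamp_s - self._last_time)
        self._last_offset = offset_px
        self._last_time = timestamp_s

    @property
    def is_fast(self) -> bool:
        return self.speed > self.fast_threshold


# Usage: a fling moves 100px in one 60Hz frame, then slows to 5px per frame.
monitor = ScrollSpeedMonitor()
monitor.on_frame(0.0, 0.0)
monitor.on_frame(100.0, 1 / 60)
fast_during_fling = monitor.is_fast
monitor.on_frame(105.0, 2 / 60)
fast_after_slowdown = monitor.is_fast
```

A production version would probably smooth the estimate over several frames so that a single jittery sample does not flip the state; that is left out to keep the sketch short.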

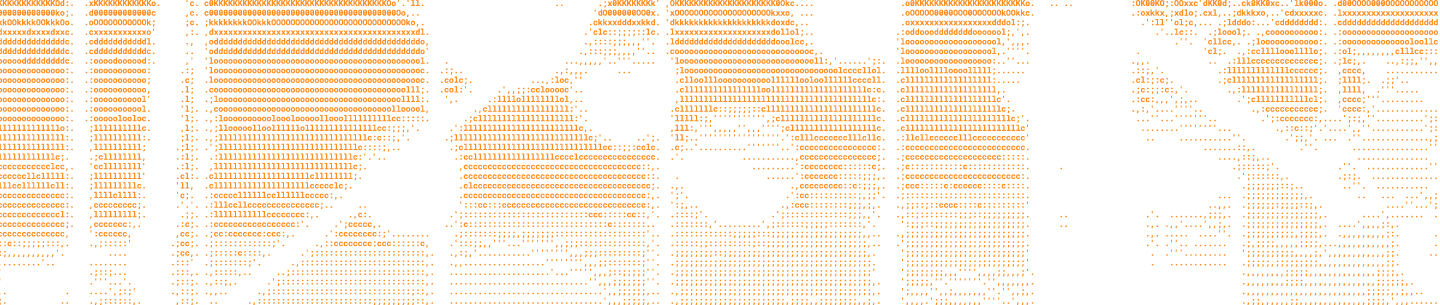

WHAT WE BUILT: We ran with the last approach and built an image renderer that contains a queue of 256px by 256px rendering jobs. After experimentation we settled on caching the resulting bitmaps, with a configurable cache size, which allows us to hold on to the most recently decoded thumbnails. In case the user scrolls back and forth, we don’t need to render the same thing again.

As the diagram indicates, when the user scrolls an image onto the screen, we first check whether we already have the high-resolution bitmap rendered. If we do, we just display that already rendered image. If not, we render the 75px by 75px thumbnail on the main thread synchronously, and only queue the 256px by 256px thumbnail in the background if the user is scrolling slowly. If the user scrolls fast, we don’t queue the rendering jobs associated with 256px by 256px thumbnails until the scrolling slows down.

As the user scrolls slowly, the background render queue doesn’t have much work to do, so the low-resolution to high-resolution swapping happens almost immediately. As the user flings really fast, nothing gets into the render queue, since we only display low-resolution thumbnails as they fly by quickly. As the user’s scrolling slows down, the background render queue starts to be fed with relevant on-screen thumbnails, so low-resolution to high-resolution thumbnail swapping is almost seamless. Rendering jobs are also dequeued as the associated thumbnails go off the screen, so the render queue holds at most a screenful of decode jobs.
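The decision flow described above can be sketched in a few lines. Everything here is hypothetical: the `BitmapCache` class, the function names, and the use of a plain list for pending jobs are illustration only. The post says the cache has a configurable size and keeps recently decoded thumbnails; least-recently-used eviction is an assumption of this sketch, not something the post states.

```python
from collections import OrderedDict


class BitmapCache:
    """Size-bounded cache of decoded high-resolution bitmaps (LRU is assumed)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, photo_id):
        if photo_id not in self._items:
            return None
        self._items.move_to_end(photo_id)  # mark as most recently used
        return self._items[photo_id]

    def put(self, photo_id, bitmap) -> None:
        self._items[photo_id] = bitmap
        self._items.move_to_end(photo_id)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


def on_thumbnail_visible(photo_id, cache, pending, scrolling_fast, decode_low_res):
    """Return the bitmap to show now; queue a high-res decode when worthwhile."""
    bitmap = cache.get(photo_id)
    if bitmap is not None:
        return bitmap  # high-resolution bitmap already rendered
    if not scrolling_fast and photo_id not in pending:
        pending.append(photo_id)  # a background worker would pick this up
    return decode_low_res(photo_id)  # cheap enough to run on the main thread


def on_thumbnail_hidden(photo_id, pending):
    """Drop the pending high-res job once its thumbnail leaves the screen."""
    if photo_id in pending:
        pending.remove(photo_id)


# Usage with stand-in "bitmaps" (plain strings) and a fake low-res decoder.
cache = BitmapCache(capacity=2)
cache.put("a", "a@256")
pending = []
low = lambda pid: pid + "@75"
shown_cached = on_thumbnail_visible("a", cache, pending, False, low)
shown_fling = on_thumbnail_visible("b", cache, pending, True, low)
pending_after_fling = list(pending)
shown_slow = on_thumbnail_visible("b", cache, pending, False, low)
pending_after_slow = list(pending)
on_thumbnail_hidden("b", pending)
```

The sketch does not cover the moment when scrolling slows down; a caller would need to invoke `on_thumbnail_visible` again for the thumbnails still on screen so their high-resolution jobs get queued.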