Dropbox is well known for storing users’ files, but it’s equally important that we can retrieve content quickly when our users need it most. For the Retrieval Experiences team, that means building a search experience that is as fast, simple, and powerful as possible. But when we conducted a research study in July 2022, one of the most common complaints was that search was still too slow. If search were faster, these users said, they would be more likely to use Dropbox on a regular basis.

At that time, we found it took ~400-450ms (p75) for the search webpage to submit a query and receive a response from the server, far too slow for users who expected quicker results. This sent us looking for ways to improve search latency.

In our early analysis, we learned that of the time it took to fetch search query results, roughly half of that time was spent in transit to and from Dropbox servers (a.k.a. network latency) while the other half was spent on determining which search results to return (a.k.a. server latency). We decided to tackle both sides of the equation simultaneously. While some of our colleagues explored ways to reduce server latency, we investigated network latency.

Search’s total latency is made up of server time and network time

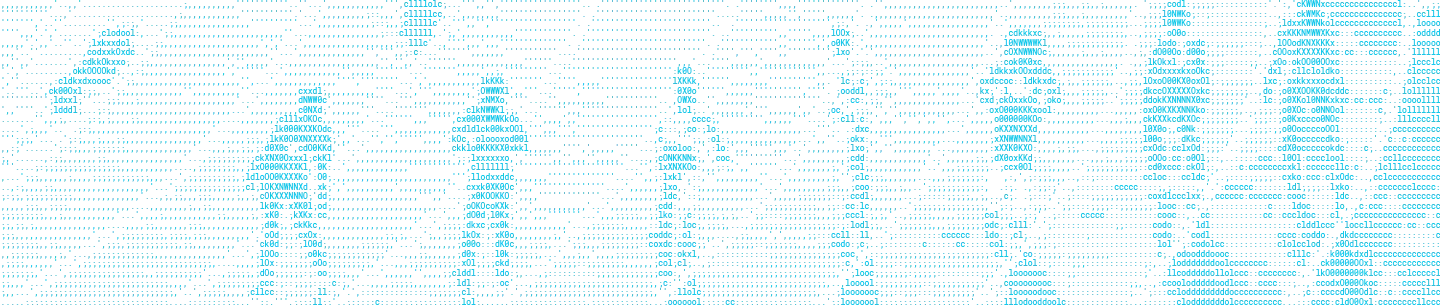

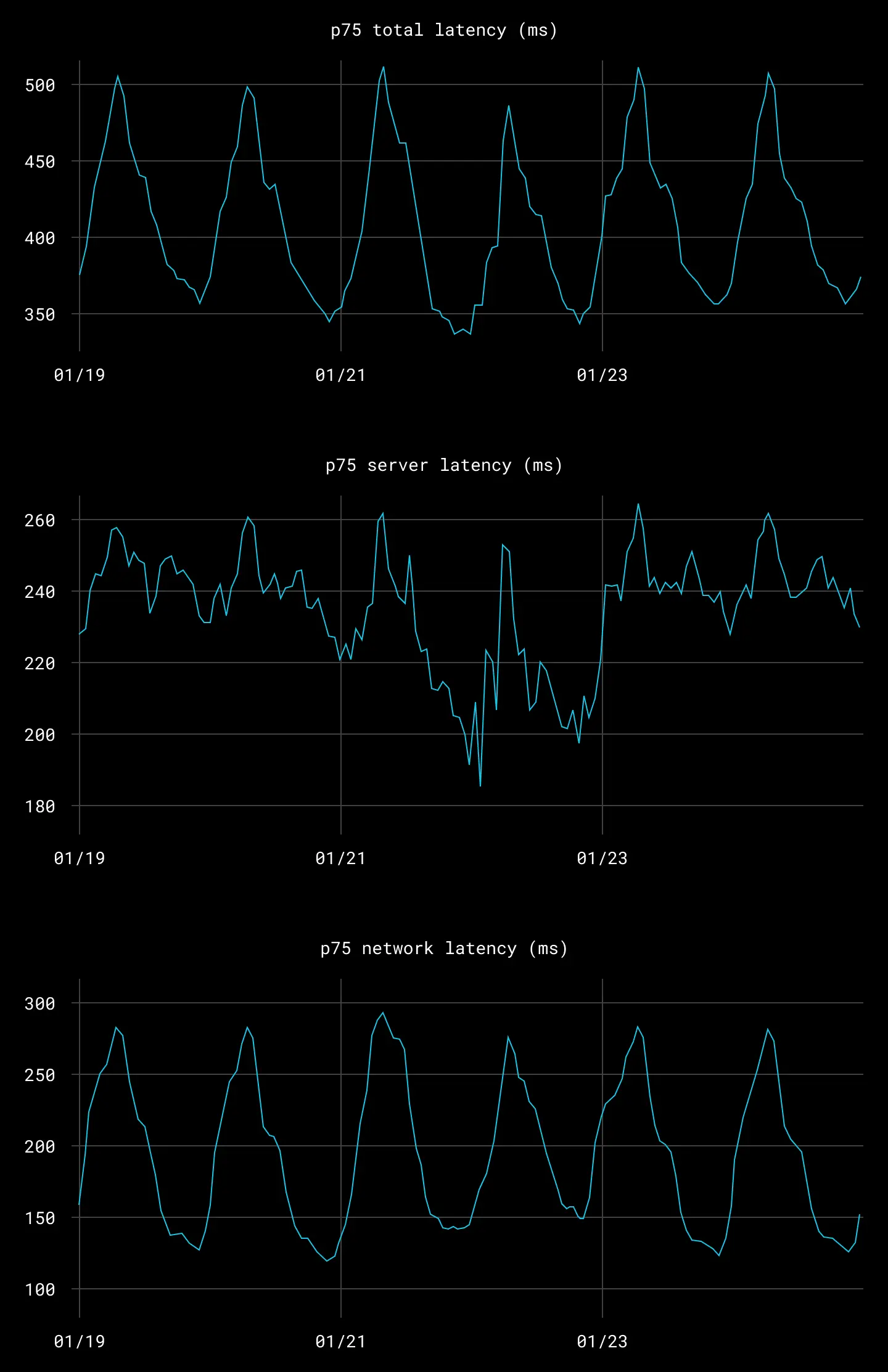

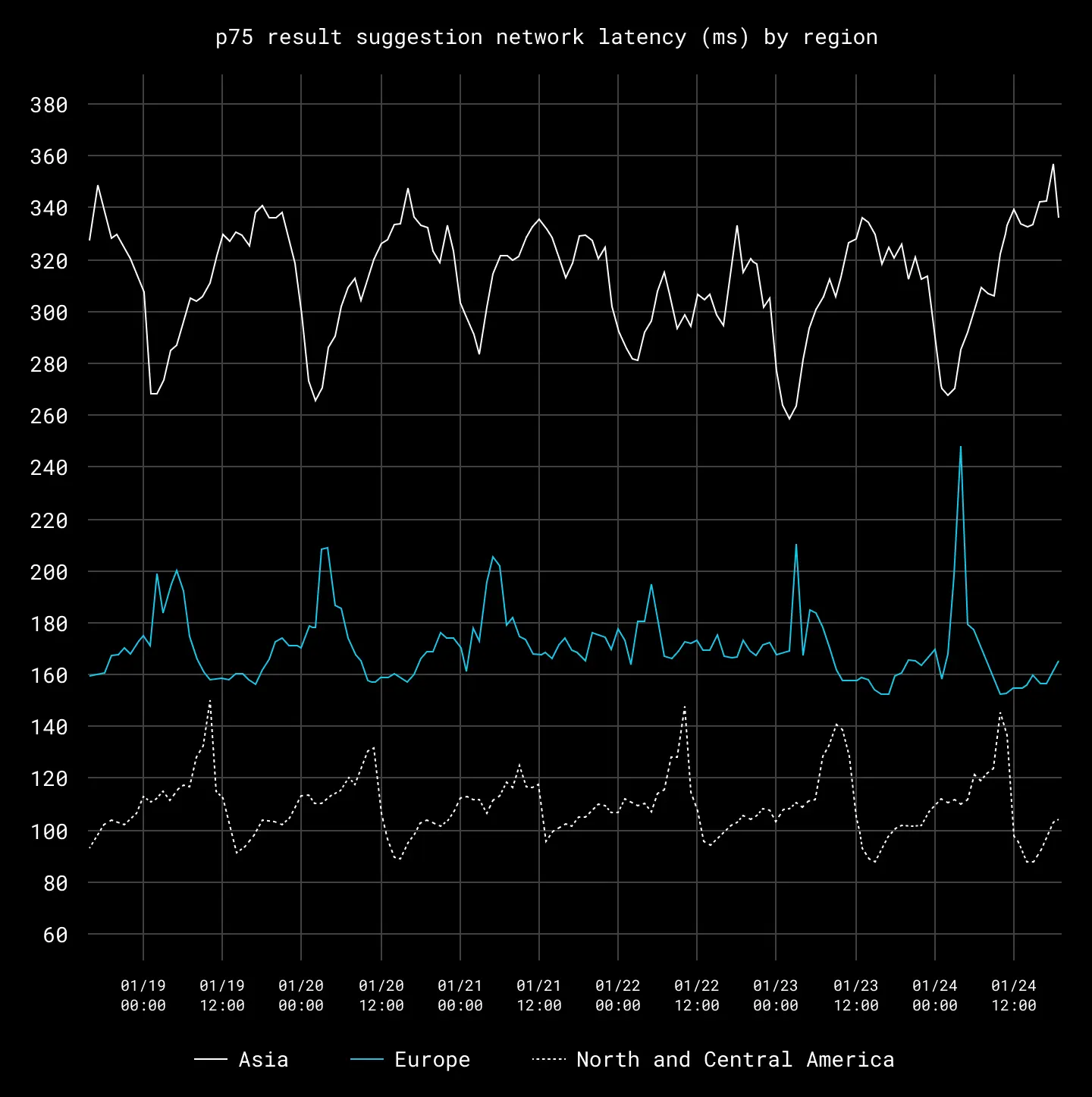

Network latency is significantly more variable than server latency. It depends on local network conditions, the user’s distance from a Dropbox data center, and even the time of day. During business hours, many users work at offices with strong internet connections, but at night they are at home on weaker connections. Compared to North America, where the majority of Dropbox data centers are located, latencies can be up to twice as high in Europe and three times as high in Asia. Considering that 25% of search requests originate from Europe and 15% from Asia, a significant portion of Dropbox users would benefit from lower network latencies.

At this point, we realized that we couldn’t tackle our network latency issues alone. In collaboration with the Traffic team, we considered our options and decided to test a possible solution: HTTP3.

Regional differences in network latency

A hypothetical speed boost

Dropbox.com currently uses HTTP2, a protocol based on TCP. The latest version, HTTP3, runs over QUIC, a transport protocol built on UDP. This speeds up connection establishment and the serving of parallel requests by:

- Introducing Zero Round Trip Time (0RTT) at the beginning of connections. Compared to HTTP2, HTTP3 makes one fewer round trip because it avoids the three-way handshake mandatory for TCP-based protocols. Furthermore, with 0RTT, subsequent HTTP3 connections establish a secure connection and make the actual request in the same packet, whereas in HTTP2, these pieces of data must be sent separately.

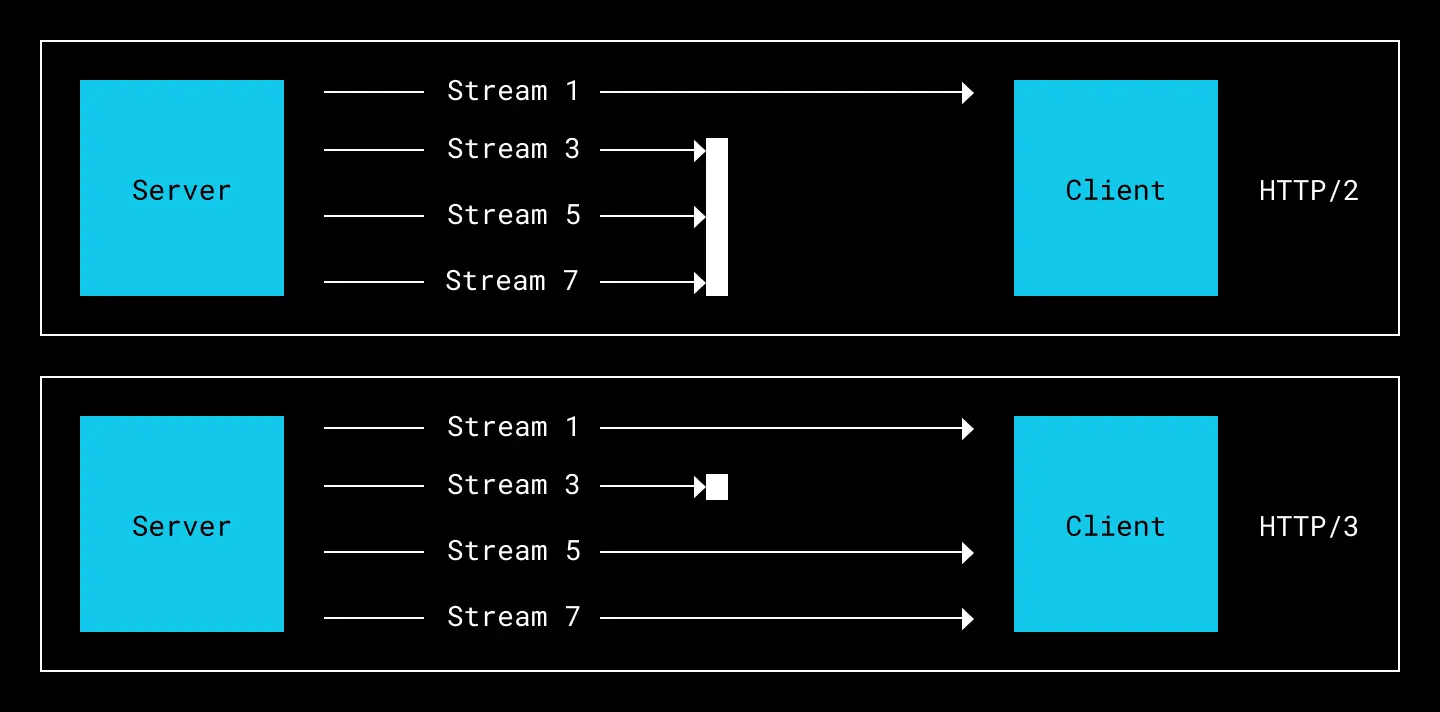

- Eliminating head-of-line blocking. TCP delivers bytes in strict order and has no notion of the separate HTTP2 streams multiplexed over it. If a packet belonging to one stream is lost, packets for other streams can be held up in the client’s TCP stack until it is retransmitted, even if the streams are unrelated to each other. HTTP3 builds its streams on top of UDP and tracks ordering per stream, so if one stream is blocked, other streams can still deliver data to the application.

Head-of-line blocking: In HTTP2, a blocked stream also delays subsequent streams, whereas in HTTP3, a blocked stream only affects that stream
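
To make the head-of-line blocking difference concrete, here is a toy model in Python. It is only a sketch: it does not implement TCP or QUIC, the `Packet` type and `completion_times` function are names made up for this illustration, and the arrival times are hypothetical. It simply compares "one ordering for the whole connection" against "one ordering per stream" when a single packet arrives late.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int           # position in the sender's overall send order
    stream: str        # logical request/response stream the packet belongs to
    arrival_ms: float  # when the packet (or its retransmission) reaches the client


def completion_times(packets, per_stream_ordering):
    """Return, per stream, when its last packet can be handed to the application.

    per_stream_ordering=False models one ordered byte stream for the whole
    connection; True models ordering that is tracked per stream.
    """
    done = {}
    latest_arrival = {}  # running max of arrival times within each ordering domain
    for p in sorted(packets, key=lambda pkt: pkt.seq):
        domain = p.stream if per_stream_ordering else "connection"
        latest_arrival[domain] = max(latest_arrival.get(domain, 0.0), p.arrival_ms)
        done[p.stream] = latest_arrival[domain]
    return done


# Hypothetical trace: packet 3 (stream A) is lost and its retransmission lands at 110 ms.
PACKETS = [
    Packet(0, "A", 10.0), Packet(1, "B", 11.0), Packet(2, "C", 12.0),
    Packet(3, "A", 110.0), Packet(4, "B", 14.0), Packet(5, "C", 15.0),
]

print(completion_times(PACKETS, per_stream_ordering=False))
# {'A': 110.0, 'B': 110.0, 'C': 110.0}
print(completion_times(PACKETS, per_stream_ordering=True))
# {'A': 110.0, 'B': 14.0, 'C': 15.0}
```

With connection-wide ordering, streams B and C wait for A's retransmission even though none of their own packets were lost. With per-stream ordering, only A pays for the loss.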

HTTP3 sounded promising. In theory, it could speed up not only search requests but also operations across all of Dropbox, from file uploads to content suggestions. However, it was unclear what the real-world impact would be. It was entirely possible, albeit unlikely, for HTTP3 to be slower than HTTP2.

We needed to be sure that Dropbox would benefit from a migration to HTTP3. Rather than take an unknown leap, we decided to test HTTP3 on a portion of Dropbox traffic first.

Setting up the experiment

To evaluate the performance of HTTP3 on Dropbox servers, the Traffic team created a test subdomain that served our main website with HTTP3. The test site was specifically designed so that we could safely make specific API requests over HTTP3 without negatively impacting users of the main website.

As part of this test site, we built a no-op API endpoint that could successfully leverage HTTP3. Because the server doesn’t perform any operations, server latency would be near zero—meaning any remaining latency would be network latency. With this endpoint in place, we then devised our HTTP3 test involving a series of actions meant to simulate typical request traffic on our website—including when a user performs a search. The simulation had three phases:

- Setup. First, we pre-warmed the cache by firing off two sequential HTTP3 requests, ignoring any timing data. This was done purely to warm up any networking caches related to the HTTP2 and HTTP3 servers equally, ensuring that subsequent HTTP2 vs. HTTP3 testing was a fair comparison. This is specifically necessary for our test because the first connection is always HTTP2; that’s when the client receives information required to support HTTP3. All subsequent connections would then try to use HTTP3.

- Running the HTTP2 control. We then ran five parallel HTTP2 requests to the no-op API endpoint and logged the network time for each request. This simulated how users currently get data from our servers, and thus was our control.

- Running the HTTP3 experiment. Finally, we ran another five parallel requests to the no-op API endpoint, but this time via HTTP3. We logged the elapsed network time for each request to compare against HTTP2.
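
The three phases above can be sketched as a small control-flow example. The real test ran in the browser against Dropbox's test subdomain. The Python below is not that code, only an illustration of the sequencing. The `send_request` callable, the `"h2"`/`"h3"` labels, and `make_fake_transport` are assumptions introduced here. A real harness would plug in a client that actually speaks each protocol.

```python
import asyncio
import time

WARMUP_REQUESTS = 2    # sequential, timing ignored
PARALLEL_REQUESTS = 5  # per protocol, timed


async def timed(send_request, protocol):
    """Await one request and return its elapsed wall-clock time in milliseconds."""
    start = time.perf_counter()
    await send_request(protocol)
    return (time.perf_counter() - start) * 1000.0


async def run_trial(send_request):
    # Phase 1 (setup): sequential warm-up requests whose timings are discarded.
    for _ in range(WARMUP_REQUESTS):
        await send_request("h3")
    # Phase 2 (control): five parallel HTTP2 requests, each timed.
    h2 = await asyncio.gather(*(timed(send_request, "h2") for _ in range(PARALLEL_REQUESTS)))
    # Phase 3 (experiment): five parallel HTTP3 requests, each timed.
    h3 = await asyncio.gather(*(timed(send_request, "h3") for _ in range(PARALLEL_REQUESTS)))
    return {"h2": h2, "h3": h3}


def make_fake_transport(log):
    """Hypothetical stand-in for the no-op endpoint: records the call, then sleeps briefly."""
    async def send_request(protocol):
        log.append(protocol)
        await asyncio.sleep(0.001)
    return send_request


calls = []
timings = asyncio.run(run_trial(make_fake_transport(calls)))
print(calls)  # the two warm-up calls, then five "h2", then five "h3"
print({protocol: len(values) for protocol, values in timings.items()})
```

Because the endpoint does no work, the elapsed time per request stands in for network time. Running the control and experiment back to back within one trial keeps both samples under the same network conditions.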

The most important aspect of this test was that the requests were made in parallel. This simulated real-world scenarios at Dropbox, where each interaction with Dropbox web fires many parallel requests. It also let us determine whether eliminating head-of-line blocking would actually speed up parallel requests; if these requests were not faster, it was unlikely HTTP3 would help us in practice.

To prevent any impact to user-facing performance, we only allowed our HTTP3 tests to be conducted once per page load, and only after the user completed a search. We ran the experiment for roughly two weeks between December 2022 and January 2023. Traffic regularly exceeded 1,500 queries per second (QPS) at peak times, and we successfully collected data from a wide sample of users around the world.

Comparing the results

Over the course of our two-week experiment, 300,000 HTTP3 requests were fired per day.

For the majority of our global users, HTTP3 reduced network latencies by only about 4-13ms (roughly 4-6%) up to p75. While this is an improvement, these wins would appear negligible to the average user. At p90, however, HTTP3 demonstrated much larger improvements, with a latency reduction of 48ms (or 13%), and at p95, a reduction of 146ms (21%). This could be explained by the fact that HTTP3 is better at handling packet drops across parallel requests by eliminating head-of-line blocking; because packet drops are more likely to occur in networks with suboptimal connection quality, the benefits of HTTP3 are more visible at the higher percentiles.

| Percentile | HTTP3 vs. HTTP2 (change in network latency) |
|---|---|
| p25 | -4.23ms / -4.73% |
| p50 | -5.55ms / -4.15% |
| p75 | -13.1ms / -5.78% |
| p90 | -47.6ms / -12.5% |
| p95 | -146ms / -20.9% |
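
A comparison like the one in this table can be computed from two sets of latency samples. The sketch below is one way to do it, under two assumptions the article does not confirm: that percentiles use the nearest-rank method, and that each cell is the difference between the HTTP3 percentile and the HTTP2 percentile. The function names and sample values are hypothetical.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def compare(h2_ms, h3_ms, percentiles=(25, 50, 75, 90, 95)):
    """Return {percentile: (absolute change in ms, relative change in %)} for HTTP3 vs. HTTP2."""
    rows = {}
    for p in percentiles:
        baseline = percentile(h2_ms, p)
        delta = percentile(h3_ms, p) - baseline
        rows[p] = (delta, 100.0 * delta / baseline)
    return rows


# Hypothetical samples, not Dropbox data: the slow tail improves more than the median.
h2_samples = [100.0, 200.0, 300.0, 400.0]
h3_samples = [90.0, 180.0, 270.0, 300.0]
print(compare(h2_samples, h3_samples, percentiles=(50, 95)))
# {50: (-20.0, -10.0), 95: (-100.0, -25.0)}
```

Negative values mean HTTP3 was faster, matching the sign convention used in the table.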

The results are even more prominent when split by region at the higher percentiles. HTTP3 significantly reduced network latencies for Asia by around 77ms at p90 and by 200ms at p95. Other high-traffic regions like Europe and North and Central America experienced smaller absolute improvements, though the relative improvements are similar across the board (21-22% at p95).

| Percentile (HTTP3 vs. HTTP2) | North and Central America | Europe | Asia |
|---|---|---|---|
| p25 | -3.20ms / -6% | -2.34ms / -2% | -3.73ms / -2% |
| p50 | -4.21ms / -5% | -3.84ms / -3% | -5.12ms / -2% |
| p75 | -9.03ms / -8% | -11.1ms / -6% | -15.0ms / -4% |
| p90 | -44.9ms / -17% | -47.3ms / -13% | -77.3ms / -14% |
| p95 | -118ms / -22% | -141ms / -21% | -200ms / -22% |

What’s next for HTTP3

Our experiment successfully demonstrated that HTTP3 significantly improved latency at the 90th percentile and above. Even though HTTP3 noticeably reduces latencies for only 10% of our users, these are the users who suffer from high latencies and will appreciate the improvement the most. The biggest beneficiaries of HTTP3 would be our international users, since the highest latencies are disproportionately found outside of North America.

We gained two major insights from our large-scale experiment:

- The benefits of 0RTT are less important because nearly all connections to dropbox.com are long-lived.

- The way HTTP3 handles head-of-line blocking significantly reduced latencies, especially in networks where packet drops are more likely to occur.
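
The first insight comes down to simple amortization: a handshake saving is paid once per connection, so it shrinks as more requests reuse that connection. The snippet below is a rough illustration only. The function name and all numbers are hypothetical, not measurements from the experiment.

```python
def handshake_saving_per_request_ms(rtt_ms, round_trips_saved, requests_per_connection):
    """Average time saved per request when a one-time handshake saving is spread over a connection."""
    return rtt_ms * round_trips_saved / requests_per_connection


# Hypothetical 100 ms round trip with one round trip saved at connection setup.
print(handshake_saving_per_request_ms(100, 1, 1))    # 100.0 (short-lived connection)
print(handshake_saving_per_request_ms(100, 1, 500))  # 0.2 (long-lived connection)
```

On a long-lived connection the per-request saving becomes negligible, which is consistent with the gains coming mainly from the handling of head-of-line blocking.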

At the beginning of our investigation into network latency, we only knew the hypothetical benefits of HTTP3. Now we have a better understanding of the actual impact that HTTP3 can bring, not only to Search but to all of Dropbox, including file operations and content suggestions with machine learning. Given the sizable performance benefit for users in our p90+, Traffic is now planning a production-ready buildout of HTTP3.

This high-impact project is the result of Dropboxers working together across several teams (specifically, Retrieval Experiences and Traffic). We’d like to give special thanks to Roland Hui, Sarah Andrabi, Khugan Shanmugeswaran, the NetEng team, and the Security team for helping us turn this theoretical investigation into a reality.
